What is Teradata Statistics Extrapolation?

In data warehousing, populating historized tables with snapshot data valid for the current day is typical.

This is a common illustration:

SELECT Snapshot_Date,Count(*) CNT FROM The_Snapshot_Table;| Snapshot Date | CNT |

| 2015-04-10 | 10 |

| 2015-04-11 | 15 |

| 2015-04-12 | 10 |

| 2015-04-13 | 25 |

| 2015-04-14 | 15 |

| 2015-04-15 | 30 |

— We assume in our example that statistics are collected on 2015-05-15, after the daily snapshot for 2015-01-15 was loaded:

COLLECT STATISTICS ON THE_SNAPSHOT_TABLE COLUMN(SNAPSHOT_DATE);

Without extrapolation, querying the snapshot date of 2015-04-16 after loading it, the optimizer anticipates zero rows being returned (assuming statistics are not recollected). An instance of such a query is shown below: SELECT * FROM table WHERE date = ‘2015-04-16’

SELECT * FROM The_Snapshot_Table WHERE Snapshot_Date=DATE'2015-04-16'Which is Statistics Information extrapolated?

The optimizer is extrapolating the statistical information below:

- The number of table rows, based on the available statistics summary information and random-AMP sampling

- The number of distinct primary index values and the maximum value per statistic histogram

- The number of rows per value for the date column

How is the Statistics Information extrapolated?

It’s crucial to comprehend that the optimizer estimates a limited number of future dates (i.e., any date after the last recorded statistics). The highest predicted date for extrapolation relies on a random AMP sample, which indicates the number of distinct dates in the extrapolation process.

The following extrapolation formula calculates the maximum future date:

[su_shadow][su_panel]Maximum Extrapolated Date = Maximum Date with Collected Statistics + ((Maximum Date with Collected Statistics – Minimum Date with Collected Statistics) * ((Distinct Random AMP Sample Dates * Average Rows Per Date from Collected Statistics) / (Distinct Dates from Collected Statistics * Average Rows Per Date from Collected Statistics))[/su_panel][/su_shadow]The collected statistics indicate an average of 1000 rows per date, as demonstrated by this example. We assume that the random AMP sample will provide 10 distinct dates in the future.

Maximum Extrapolated Date = 2015-03-30 + ((2015-03-30 – 2015-01-01) * ((10 * 1000) / (89 * 1000)) = 2015-04-09

Assuming our query selects a date range spanning from March 25th, 2015, to April 9th, 2015, we can estimate the number of rows.

Estimated Rows from Collected Stats + Estimated Rows from Extrapolation: (6*1000) + (10*1000) = 16000 Rows.

The extrapolation has improved the estimation significantly compared to without it (where the optimizer would have only estimated 6000 rows based on the outdated statistics).

The quality of the estimation based on a random AMP sample highly depends on the skew factor of the table.

Random AMP sampling that delivers only unique future dates is an ideal scenario. However, a biased table could result in greatly inaccurate estimations of future dates.

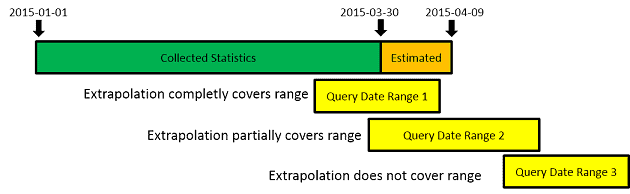

Another crucial factor is the coverage of the date range in your query by the extrapolated dates.

Ideally, the extrapolated statistics should encompass the entire query date range. However, even if only a portion of the statistics are extrapolated, including them will improve the estimated statistics overall.

Extrapolation is not beneficial if the extrapolated data does not encompass your desired timeframe.

Utilizing statistics extrapolation can be beneficial for daily snapshot tables; however, it should not replace the collection of statistics entirely. Extrapolation can aid in moving the recollection process to a less loaded point in the Teradata system.

You are right regarding point (1) and I always wondered why this may be the reason? My assumption was that during the process it’s easier as “division by zero” handling can be avoided?

Regarding point (2), as far as I remember, the calculation formula is available in the official documentation.

Hi Roland,

Interesting article, thanks. I have a couple of points/questions:

1) (minor point), AFAIK the optimizer never estimates ‘0 rows’, the minimum value it uses is 1 row or ‘1 row per amp’.

2) (question) where did you get the extrapolation calculation from?

Cheers,

Dave