1. Complete and up-to-date Statistics

At the start of Teradata SQL Tuning, statistics are a vital concern. The Teradata Optimizer employs statistics to formulate the optimal execution plan for our query.

The adequacy of statistics or dynamic AMP sampling varies according to the data demographics.

To initiate optimization, updated statistics must be provided to the optimizer. The command DIAGNOSTIC HELPSTATS ON FOR SESSION is useful for this purpose.

By running our SQL statement with EXPLAIN in a session, the optimizer will provide us with statistics at the end of each execution plan to help improve it.

Not all suggested statistics improve the execution plan. Some only alter the estimated number of rows.

It is recommended to implement these suggestions gradually, assessing their impact on the plan and avoiding unnecessary resource consumption during the collection process.

The importance of placing statistics at the forefront of our optimizations is self-evident. Each statistic gathered has the potential to alter the execution plan, potentially rendering other optimizations unnecessary or ineffective. If no statistics have been collected, the optimizer can obtain them dynamically at runtime through AMP sampling. Whether these statistics are adequate will depend on the data demographics, as elaborated upon later in this article.

Dynamic-AMP sampling

Dynamic AMP sampling is effective for optimizing tables with diverse values. It is important to ensure that the table has more rows than AMPs in the system. Generally, the more distinct values a column has, the more accurately dynamic AMP sampling represents the data demographics. Primary key columns and those defined as UNIQUE are ideal for this technique. However, it is not recommended for heavily skewed columns.

There is a high risk that the optimizer may select a sample from an AMP that is not representative, thereby overestimating or underestimating the number of rows. Therefore, UPI and USI are ideal candidates for dynamic AMP sampling due to their aforementioned benefits. Dynamic AMP sampling can only be used on indexed columns.

Gathering statistics for all non-indexed columns used in joins, WHERE conditions, or aggregations is crucial. If the optimizer does not possess statistics on a non-indexed column, it must rely on heuristics to estimate the number of selected rows. However, heuristics are rules of thumb that mostly result in erroneous estimates.

Below is an extensive list of scenarios where dynamic AMP sampling is satisfactory:

- USIs or UPIs used with equality predicates (such as WHERE conditions)

- NUSIs with an even distribution of column values

- NUPIs with an even distribution of column values

Fully collected Statistics

Complete statistics are necessary for columns with skewed or low distinct values.

Here is a comprehensive list for collecting statistics:

- Non-indexed columns used in predicates (such as WHERE conditions)

- All NUSI with an uneven distribution of column values

- USIs and UPIs if used in non-equality predicates or range constraints)

- NUPIs with an uneven distribution of column values

- Join columns

- Small tables with fewer rows than AMPs

- The dummy column “PARTITION” for all tables

- Partitioning columns of row-partitioned tables

Statistics for Row-Partitioned Tables

The Teradata Optimizer requires specific statistics for PPI tables.

Collect the following statistics on PPI tables:

Dummy Column “PARTITION”

The “PARTITION” dummy column’s statistics indicate the number of empty partitions to the optimizer.

Dummy column “PARTITION” + PI column

When the partitioning columns are not included in the primary index, it is necessary to obtain these statistics. This is because identical primary index values can appear in multiple partitions. The statistics are gathered on a fictitious column named “PARTITION” plus the primary index, which assists the optimizer in estimating the expenses associated with the sliding window and rowkey-based merge join and the dynamic partition elimination steps.

Teradata Multicolumn Statistics

Single and multicolumn statistics each offer unique advantages and limitations. Single statistics provide greater flexibility for the Optimizer, while multicolumn statistics expose correlations between columns that the Optimizer can leverage. Nevertheless, multicolumn statistics are less universally applicable than single-column statistics.

The Optimizer utilizes statistics for (A, B) solely in the case of predicates on (A) or (A, B), not if the predicate involves solely B (e.g., “WHERE column = B”). In addition, the Optimizer can make use of individual statistics for (A) and (B) when predicates involve (A), (B), or (A, B).

Here are the guidelines for utilizing multicolumn statistics:

- Columns that are used together with equality predicates.

- Columns are used for joins or aggregations with some degree of correlation. The more the combination of actual values is related, the more significant the importance of collecting multicolumn statistics is in this situation.

Identify Stale Statistics

Identifying stale statistics can be achieved through various techniques. The simplest approach involves dividing the SQL statement and performing individual tests on each partial statement. An overview can be accomplished by comparing the estimated row count (displayed in the Explain output) with the actual number of query results.

This approach is optimal for SQL statements that take an excessive amount of time to execute.

Here’s an example:

SELECT t01.* FROM <Tablename1> t01 INNER JOIN <Tablename2> t02 ON t01.PK = t02.PK WHERE t01.<Column name> = 1 AND t02.<Column name> = 2; The above query can be divided into two parts for testing: SELECT * FROM <Tablename1> WHERE <Columnnname> = 1; SELECT * FROM <Tablename2> WHERE <Columnnname> = 2;Obsolete statistics may result if the number of rows returned from executing the sub-queries significantly differs from the estimate provided in the EXPLAIN statement.

2. Teradata Primary Index Choice

Optimizing Teradata SQL requires selecting the optimal Primary Index. To allow the Optimizer to utilize the primary index in joins, design queries with all join columns included in the primary index. If even one column is missing from the join condition, the resulting row hash will differ, regardless of the order in which those columns are defined within the primary index. However, additional columns may be included in the join condition. Residual conditions are then applied after locating the rows using row hash.

To join tables, they must have matching primary indexes for their rows to be on the same AMP. The Optimizer can execute a direct join without redistributing rows when this condition is met.

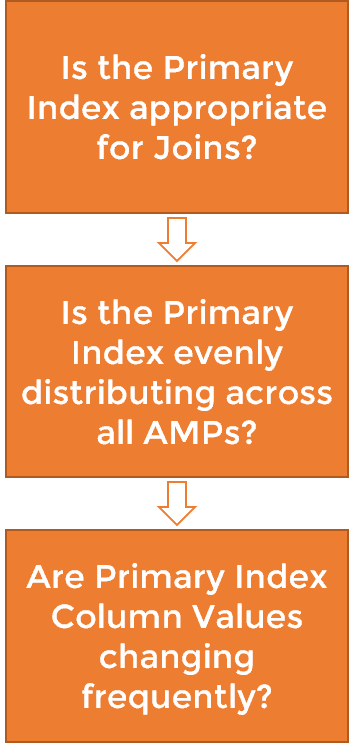

A volatile table can be created if we cannot alter the primary indexes or require a specific primary index for a particular SQL statement. This table must have an identical structure and content as the original table but with a distinct primary index. When selecting the primary index, three factors must be considered: even data distribution, compatibility for join operations, and minimal volatility.

An equitably distributed Primary Index guarantees simultaneous start and finish of work across all AMPs, encapsulating the essence of parallel data processing.

3. Teradata SQL Tuning with Indexing & Partitioning

Indexes or partitioning can enhance query performance.

Teradata provides a variety of indexes, each with its own pros and cons. The appropriate index for a workload is determined by its nature.

The Teradata Secondary Index

Secondary indexes can be categorized as unique (USI) or non-unique (NUSI), each with distinct functionalities beyond their level of uniqueness.

The Teradata USI

The USI resembles the Unique Primary Index in that the subtable’s Index Rows are uniformly distributed and organized by RowHash across all AMPS.

If the USI includes all WHERE condition columns, the owning AMP of the index row can be determined. This index row contains the ROWID of the desired base table row, allowing for direct access. The USI is a high-performance option for accessing individual rows, making it ideal for tactical workloads.

The Teradata NUSI

The NUSI is not distributed based on a Rowhash. NUSI rows are consistently stored with the corresponding base table row on the AMP. As a result, NUSI access always involves all AMPs.

The NUSI index rows are sorted either by rowhash or integer value. Sorting by an integer value, which can include a date as an internal data type, is advantageous for the NUSI because it is well-suited for range scans, such as retrieving all dates in a certain month.

The Teradata Row-Partitioned Table

Partitioning in Teradata is an alternative method of organizing rows on the mass storage device instead of secondary indexes, which are stored in a separate sub-table and consume additional space.

Partitioned table rows are distributed by rowhash to AMPs, assigned to their respective partitions, and sorted accordingly. The rows within each partition are sorted by rowhash and arranged on the disk to allow for full cylinder scanning.

Partitioning a table and utilizing a secondary or join index may be more suitable based on the workload. Additional indexes can also be created for partitioned tables.

Partitioned tables are commonly utilized for strategic queries that involve aggregating data sets, such as sales data for the current year.

Disadvantages of Indexing & Partitioning

When utilizing indexing techniques, it is imperative to consider the overall architecture of the data warehouse to determine if the solution is appropriate. Indexes have the potential to impede the ETL process due to various factors.

To load tools like Fastload, removing any secondary or join indexes must be removed beforehand.

Teradata is responsible for managing the index sub-tables. When executing Insert, Delete, and Update statements, it is necessary to maintain both the index sub-table and the actual table.

Drop potentially useful indexes that are not utilized by the optimizer and not beneficial to the overall PDM design to avoid wasting space and resources.

4. Query Rewriting

Rewriting a query can enhance its performance.

I view SQL statements as black boxes and prioritize technical methods for optimization.

Below are some query rewriting examples:

- EXISTS instead of IN

- Splitting a large SQL statement into smaller parts

- UNION ALL instead of UNION

- DISTINCT instead of GROUP BY

Since I don’t need to comprehend the content and business logic of a query, I don’t need to contact its author. Typically, technical optimizations are not very risky.

I delve into business logic once I’ve exhausted technical optimization. Comprehending business logic reveals further chances for refinement.

Rewriting queries can often resolve performance issues, even when other attempted methods have been unsuccessful.

5. Teradata SQL Tuning with Real-Time Monitoring

To optimize Teradata SQL performance, it is essential to monitor the query execution in real time. One can identify crucial steps by observing the query’s progress through Viewpoint during runtime.

When using Viewpoint, follow these steps:

- Steps that take a long time to finish consume a lot of resources.

- Steps that are skewed, especially if the skew factor reaches 99%, as this means that all work is done by only one AMP or a few AMPs.

Analyzing the bad query

We must consider the underlying reason for the faulty query stage. Here are some suggestions:

- Does the base table have a skewed primary index (static skew)?

- Does the redistribution of rows cause skew (dynamic skew)?

- Are massive amounts of data redistributed because of poor join preparation due to missing or stale statistics?

- Are there several hash collisions during the execution of the INSERT statement?

Outdated or incomplete statistics can result in erroneous decisions when preparing joins, such as duplicating a table across all AMPs instead of rehashing or selecting an incorrect join method, such as using a product join instead of a merge join.

6. Comparison of Resource Usage

Measuring resource consumption before and after query optimization is an important task in Teradata SQL tuning.

Query run times can be misleading due to concurrent workloads’ impact on other queries beyond our control.

When performing tuning, we prefer utilizing absolute key figures that are independent of the activities running on our Teradata system.

To obtain a comprehensive analysis of the effectiveness of each step in optimizing an SQL statement, execute the following query. Access to table DBC.DBQLOGTBL (or related views) with read permissions are required.

Assign each optimized query with a unique query band to identify it in DBC.DBQLOGTBL.

SET QUERY_BAND = 'Version=1;' FOR SESSION; SELECT AMPCPUTIME, (FIRSTRESPTIME-STARTTIME DAY(2) TO SECOND(6)) RUNTIME,SPOOLUSAGE/1024**3 AS SPOOL_IN_GB,CAST(100-((AMPCPUTIME/(HASHAMP()+1))*100/NULLIFZERO(MAXAMPCPUTIME)) AS INTEGER) AS CPU_SKEW, MAXAMPCPUTIME*(HASHAMP()+1) AS CPU_IMPACT,AMPCPUTIME*1000/NULLIFZERO(TOTALIOCOUNT) AS LHR FROM DBC.DBQLOGTBL WHERE QUERYBAND = 'Version=1;' ;The query above will retrieve the subsequent measures:

- The total CPU Usage

- The Spool Space needed

- The LHR (ratio between CPU and IO usage)

- The CPU Skew

- The Skew Impact on the CPU

We aim to reduce overall CPU utilization, spool space consumption, and CPU skewing. The ideal LHR value is approximately 1.00.

Check out our related articles:

Winning Tactics For Teradata Sample StatisticsTactical Workload Tuning on TeradataThe Primary Index ChoiceTutorial Point Tuning Ideas

Hi Roland,

Is it fine to collect stats on all columns recommended by DIAGNOSTIC command ?

Is there any specific way to know as to which stats recommendations should be implemented and which ones to skip ?

I generally collect stats on single column stats recommendations and skip the multi-column stats. But there have been instances where multi-column stats recommendations helped after implementation.

Regards,

Dhruba

Not all recommended statistics are needed. I follow a similar approach. Especially when it comes to multi-column statistics we have to consider that their application is limited compared to single column stats. While the optimizer can use the first part of a statistic on columns (a,b) i.e. the “a”, it will not be able to use a query which, for example, has a WHERE condition only on column “b”. Of course, the ideal scenario for the statistic on columns(a,b) is a WHERE condition ob both columns.

It’s similar to statistics on functions, such as on CASE statements. They are only useful for a very specific kind of query which matches exactly this function.

INSERT INTO eitopsdb.GTT_srv_ft_data_grp_nbr_rk

SELECT BASE.srv_accs_id

,BSS.data_grp_nbr

FROM Eitopsdb.srvft_blstmt_usgvol_fltavg_rk BASE INNER JOIN

EDWVIEWS.bl_stmnt_sub BSS

ON

BASE.srv_accs_id = BSS.srv_accs_id

— WHERE bl_cyc_dt BETWEEN DATE -31 — insure one month ago

— AND DATE -1 — Yesterday

QUALIFY 1 = ROW_NUMBER() OVER

(PARTITION BY BSS.srv_accs_id

ORDER BY BSS.bl_cyc_dt DESC, BSS.data_grp_nbr DESC)

Could anybody help in tuning this query??

It is failing with below error-

*** Failure 3156 Request aborted by TDWM. Exception criteria exceeded: Spool Usage.

Statement# 1, Info =0

Hi – The duplicate filtering should be taken care of before participating in joining operations. Share the explain plan of this Query for more detail.