1. Outline

This showcase demonstrates optimizing statements with multiple JOINs using Teradata Optimizer’s tuning approach. The approach efficiently determines the best JOIN strategy and implements data redistribution instead of duplication when necessary.

Identify and break down underperforming segments to optimize complex logic with multiple joins. Employ an execution plan and monitor query performance and resource utilization to ascertain the most efficient join sequence for optimal performance.

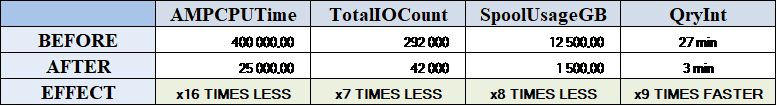

The results are remarkable. Optimizing JOINs significantly reduced resource utilization by multiple orders.

In some instances, tuning a query with numerous joins and logic proves to be intricate, hence breaking it down into sequential steps could be advantageous. This renders it more convenient in terms of monitoring performance, transparency, and execution plan.

2. Heavy load Statement with lots of JOINs inside

This is an actual query executed in our production environment:

- Heavy queries run daily, which consume lots of resources;

- Takes place at ETL Staging area (pre-detailed layer);

- Source system entries row are enriched with surrogate keys and ETL metadata;

There are 12 LEFT JOINs occurring inside.

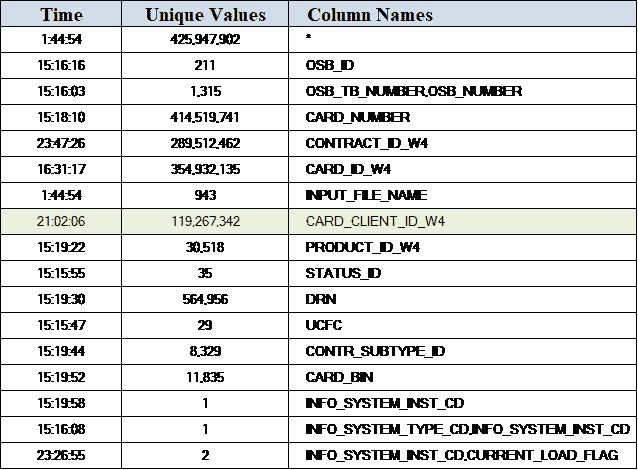

The cumulative staging table (425M+ rows) is JOINed to Surrogate Keys tables (the largest is 125M+ rows).

Take a look at the source code:

LOCKING ROW FOR ACCESS

SELECT

_S.*

SK_ACC_DEV.TGT_ID,

SK_PTY_CLIENT.TGT_ID,

SK_AGRMNT.TGT_ID,

SK_PTY_VSP.TGT_ID,

SK_PTY_OSB.TGT_ID,

SK_PROD.TGT_ID,

SK_ACC_DEV_REASON_TYPE.TGT_ID,

SK_DRN.TGT_ID,

SK_UCFC.TGT_ID,

SK_CONTR_SUBTYPE.TGT_ID,

SK_CARD_BIN.TGT_ID,

FROM SANDBOX.S0160000010008_CARD _S

LEFT JOIN SANDBOX.K_AGRMNT_NK07 AS _SK_AGRMNT

ON (_SK_AGRMNT.NK07_AGREEMENT_ID_W4 IS NOT NULL

AND _SK_AGRMNT.NK07_AGREEMENT_ID_W4=_S.CONTRACT_ID_W4 )

LEFT JOIN SANDBOX.K_PTY_NK12 AS _SK_PTY_CLIENT

ON (_SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID IS NOT NULL

AND _SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID=_S.CARD_CLIENT_ID_W4 )

LEFT JOIN SANDBOX.K_PROD_NK03 AS _SK_PROD

ON (_SK_PROD.NK03_PRODUCT_ID_W4=_S.PRODUCT_ID_W4 )

LEFT JOIN SANDBOX.K_ACCESS_DEVICE_NK10 AS _SK_ACC_DEV

ON (_SK_ACC_DEV.NK10_CARD_NUMBER IS NOT NULL

AND _SK_ACC_DEV.NK10_CARD_NUMBER=_S.CARD_NUMBER )

LEFT JOIN SANDBOX.S0160000010001_BRANCH _B

ON (_S.OSB_TB_NUMBER=_B.TB_OLD_NUMBER

AND _S.OSB_NUMBER=_B.OSB_NUMBER )

LEFT JOIN SANDBOX.K_PTY_NK13 AS _SK_PTY_VSP

ON _SK_PTY_VSP.NK13_VSP_FULL_NUMBER = _B.TB_NUMBER

LEFT JOIN SANDBOX.K_PTY_NK11 AS _SK_PTY_OSB

ON SK_PTY_OSB.NK11_TB_NUMBER = B.TB_NUMBER

LEFT JOIN SANDBOX.K_ACCESS_DEVICE_RSN_TYPE_NK01 AS _SK_ACC_DEV_REASON_TYPE

ON (_SK_ACC_DEV_REASON_TYPE.NK01_W4_STATUS_ID=_S.STATUS_ID

AND _SK_ACC_DEV_REASON_TYPE.NK01_W4_STATUS_ID IS NOT NULL)

LEFT JOIN SANDBOX.K_ACCESS_DEVICE_CLASS_VAL_NK05 AS _SK_DRN

ON (_SK_DRN.NK05_DRN=_S.DRN

AND _SK_DRN.NK05_DRN IS NOT NULL )

LEFT JOIN SANDBOX.K_ACCESS_DEVICE_CLASS_VAL_NK03 AS _SK_UCFC

ON (_SK_UCFC.NK03_UCFC=_S.UCFC

AND _SK_UCFC.NK03_UCFC IS NOT NULL )

LEFT JOIN SANDBOX.K_CONTR_SUBTYPE#WAY4_NK01 AS _SK_CONTR_SUBTYPE

ON (_SK_CONTR_SUBTYPE.NK01_CONTR_SUBTYPE_ID=_S.CONTR_SUBTYPE_ID

AND _SK_CONTR_SUBTYPE.NK01_CONTR_SUBTYPE_ID IS NOT NULL )

LEFT JOIN SANDBOX.K_ACCESS_DEVICE_CLASS_VAL_NK04 AS _SK_CARD_BIN

ON (_SK_CARD_BIN.NK04_CARD_BIN=_S.CARD_BIN

AND _SK_CARD_BIN.NK04_CARD_BIN IS NOT NULL );

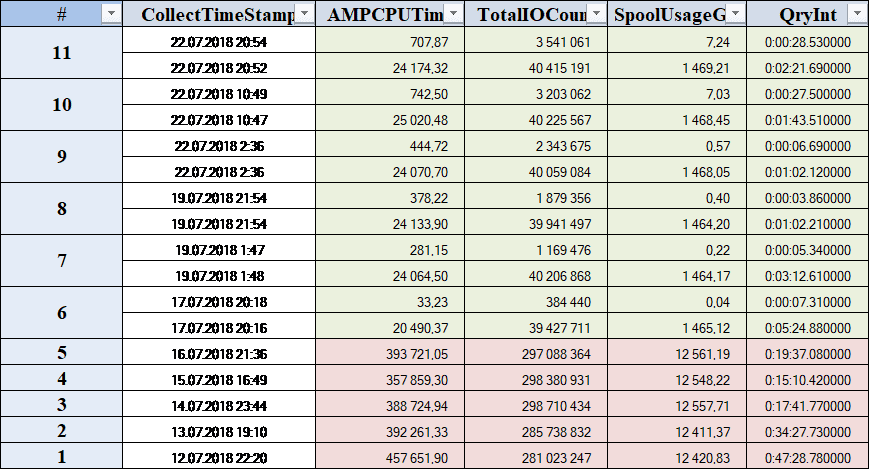

3. Poor-performing query needs to be reviewed

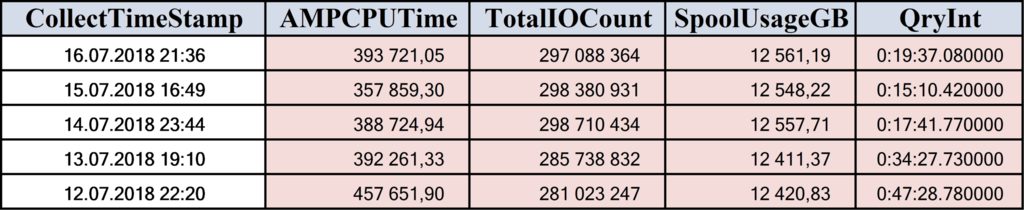

As per the Query Log, the query has exhibited inadequate performance indicators and tends to rank among the statements with poor performance.

It has only grown in resource consumption recently, so it needs to be reviewed and tuned.

Resources are being inefficiently utilized as this session alone occupies 40-45% of the CPU time for the entire ETL process of the source system.

Examine the query’s performance.

4. Examine the execution plan

Improving performance through query tuning is a complex endeavor. Where should we begin?

To begin, let’s delve further into the Execution Plan and pinpoint the specific section of the underperforming query.

Here we see the JOIN with the clients’ surrogate key table consisting of over 125 million rows, with data evenly redistributed across all AMPs. However, it should be noted that this join requires data duplication on all AMPs.

10) We do an all-AMPs RETRIEVE step from a single partition of

SANDBOX._SK_PTY_CLIENT with a condition of … into Spool 26 (all_amps)

which is duplicated on all AMPs with hash fields …

Then we do a SORT to order Spool 26 by row hash. The result spool file will not be cached in memory. The size of Spool 26 is estimated with no confidence to be 230,896,636,992 rows (10,852,141,938,624 bytes). The estimated time for this step is 16 minutes and 22 seconds.5. Approach: Statistics, JOIN INDEX, Sequence of JOINs

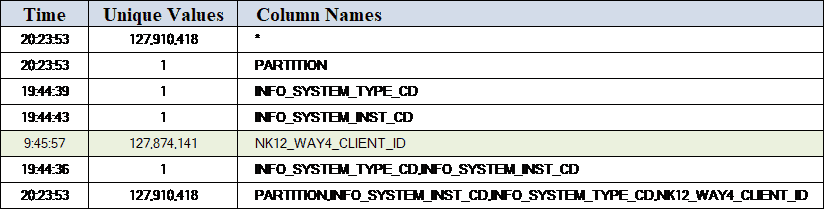

- Statistics gathering

Ensure accurate statistics are present. Is it necessary to collect additional data if any statistics are absent in the connecting fields?

COLLECT STATISTICS COLUMN (PARTITION

, INFO_SYSTEM_INST_CD

, INFO_SYSTEM_TYPE_CD

, NK12_WAY4_CLIENT_ID)

ON SANDBOX.K_PTY_NK12 ;

HELP STATISTICS SANDBOX.K_PTY_NK12;

HELP STATISTICS SANDBOX.S016_0008_CARD;

But still, Teradata Optimizer decides to perform table duplication and does not change the execution plan.

- Introducing JOIN INDEX

Should we proceed to create a Join Index – the Teradata-specific way of materializing views?

I have tried a couple of different JI definitions, but unfortunately, Teradata Optimizer would not use it for specific reasons. Furthermore, it would add significant overhead, so it might not be the best option.

CREATE JOIN INDEX SANDBOX.JI_CC0160000010008_CARD

AS

SELECT

_S.CARD_ID_W4

, _S.CARD_CLIENT_ID_W4

, _SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID

, _SK_PTY_CLIENT.TGT_ID

FROM SANDBOX.S0160000010008_CARD /*SANDBOX.S016_0008_CARD*/ _S

INNER JOIN SANDBOX.K_PTY_NK12 AS _SK_PTY_CLIENT

ON (_SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID IS NOT NULL

AND _SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID=_S.CARD_CLIENT_ID_W4 );

- JOIN sequence manipulation

Finally, let us try to force a particular sequence of joining tables and see the results. So how do you do this? You can decompose this large query into two consequent ones:

1) First, perform all the JOINs except one causing bad performance

2) JOIN intermediate result to the last large table

How is the sequence of joins determined? It depends on Teradata’s cost-based optimization. There are no HINTs as you have in Oracle to force the optimizer to execute the statement the way you want.

Dividing the initial statement into two subsequent ones would compel Teradata to follow a specific join order, resulting in optimal outcomes.

Comment out the JOIN condition and include it in the second step.

/* LEFT JOIN SANDBOX.K_PTY_NK12 AS _SK_PTY_CLIENT

ON (_SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID IS NOT NULL

AND _SK_PTY_CLIENT.NK12_WAY4_CLIENT_ID=_S.CARD_CLIENT_ID_W4) */

6. Improved performance with data redistribution

Here is the new EXPLAIN Plan excerpt for the second step of the query utilizing data redistribution and performing Hash JOIN:

3) We do an all-AMPs RETRIEVE step from a single partition of SANDBOX._SK_PTY_CLIENT with a condition of … into Spool 25 (all_amps) Spool 25 is redistributed by hash code to all AMPs The size of Spool 25 is estimated with low confidence to be 127,257,826 rows. The estimated time for this step is 0.58 seconds. 11) We do an all-AMPs JOIN step from Spool 2, which is joined to Spool 23 Spool 25 is used as the hash table and Spool 23 is used as the probe table in a right outer joined using a classical hash join of 2 partitions with a join condition of … The size of Spool 28 is estimated with low confidence to be 387,595,663 rows ( 815,113,679,289 bytes). The estimated time for this step is 16.71 seconds.The results are exceptional, with performance indicators increasing significantly to utilize the Teradata capacity fully.

Here are the performance metrics before and after optimization. I’ve combined two consecutive steps into one for easier reference.

Artemiy Kozyr is Data Engineer at Sberbank, Moscow, with a Master’s Degree in CS.

He has five years of Experience in Data Warehousing, ETL, and Visualization for Financial Institutions.

Contacts:

Great performance tuning example. Thank you very much for this one!