Our previous article defined the term workload and explained how Teradata workload management categorizes queries. We briefly noted that the grouping of requests is primarily driven by the allocation of resources, namely CPU seconds and IOs.

For those unfamiliar with workload management, I strongly suggest reading the first installment of our series on the topic.

Teradata Workload Management – The Basics

This article analyzes the Teradata priority scheduler and how it allocates resources to workloads and requests. The priority scheduler is responsible for assigning the available resources to active requests.

Teradata 14 integrates the Linux priority scheduler of SLES11, known as the “completely fair scheduler” (CFS), into its priority scheduler. Our upcoming article will provide further information on how the CFS allocates resources, including CPU seconds and frequency.

We will not discuss prior versions of the Teradata priority scheduler for a simple reason: The share of Teradata 13 sites running on SLES 10 is rapidly decreasing, and this information would be obsolete soon.

The Priority Scheduler Hierarchy

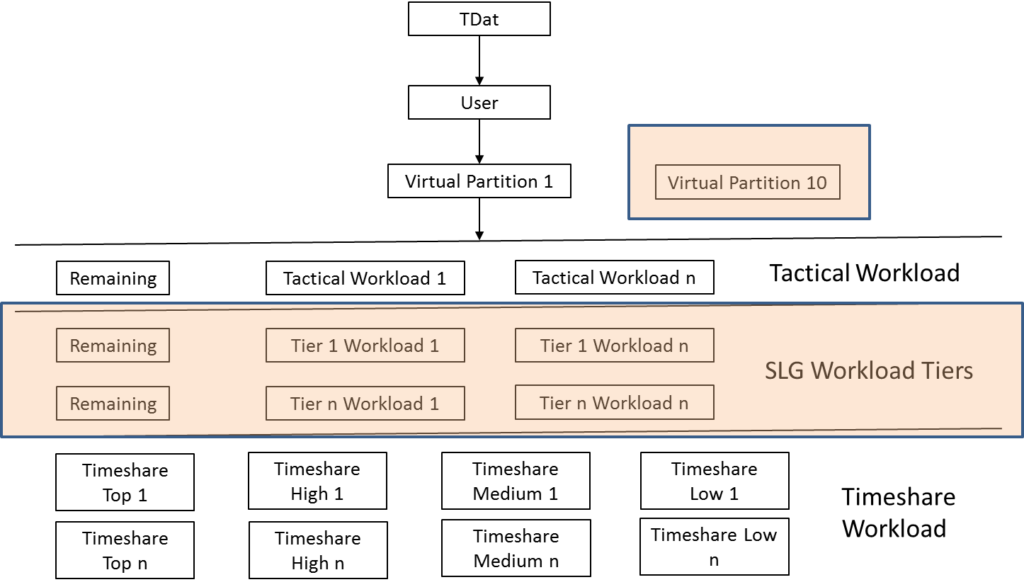

The CFS uses hierarchies to implement priorities, and so does the Teradata priority scheduler, since it is built on top of the CFS. A typical hierarchy for the Teradata 14 priority scheduler is shown in the graphic above.

Teradata systems incorporate workload management, namely Teradata Active Workload Management (TASM) or Teradata Integrated Workload Management (TIWM). TASM is the more sophisticated option, providing additional features.

The above graphic illustrates how the priority scheduler hierarchies of TASM and TIWM differ (the differences are highlighted in orange). TIWM provides only one virtual partition and does not support SLG workload tiers.

Resource Allocation Rules

Resources are allocated hierarchically, from the top of the hierarchy to the bottom.

The principle is simple: assign the most important workload to the top of the hierarchy and the least important workload to the bottom.

Teradata workload management comprises three primary levels: Tactical, SLG (TASM exclusively), and Timeshare. These levels are where workloads are defined, each offering distinct features. In the following sections, we will discuss these levels comprehensively.

Every level can access the resources required for executing its tasks. However, a safety mechanism is in place to prevent scenarios where the upper levels exhaust resources, and the lower levels are left with none.

On the tactical tier and on each SLG tier, the system automatically defines a “remaining” workload that reserves at least 5% of the resources for the lower levels of the hierarchy. These workload definitions connect the levels and allow available resources to flow downwards.

The share held by the “remaining” dummy workload can vary with the system’s setup and the current load. It is strongly advised to reserve more than the 5% minimum for it.

Resources from the tactical and SLG tiers will cascade down to lower levels of the hierarchy.
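The cascade can be illustrated with a small sketch. It is a toy model, not Teradata code: the `cascade` helper, the tier names, and the demand figures are hypothetical, and it assumes the 5% reserve is taken from whatever reaches each tier.

```python
def cascade(tiers, total=100.0, reserve=0.05):
    """Toy model of top-down allocation.

    tiers: list of (name, demand) pairs ordered from top to bottom, where
    demand is what the tier would like to consume (percent of the system).
    Every tier except the last must leave `reserve` of its incoming
    resources to the levels below (an assumption of this sketch).
    """
    incoming = total
    result = []
    for i, (name, demand) in enumerate(tiers):
        is_last = i == len(tiers) - 1
        cap = incoming if is_last else incoming * (1.0 - reserve)
        used = min(demand, cap)
        result.append((name, round(used, 2)))
        incoming -= used  # whatever is not used flows down
    return result, round(incoming, 2)


usage, idle = cascade([("tactical", 100.0), ("slg_1", 100.0), ("timeshare", 100.0)])
print(usage, idle)
```

Even when every tier demands everything, the lower tiers in this model still receive something: 95% for the tactical tier, 4.75% for the SLG tier, and 0.25% for timeshare.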

Assign your most critical requests to the top tier of the hierarchy, the tactical tier, to ensure they get the resources they need. The safety mechanism described above prevents lower tiers from being deprived of resources by prioritization at higher levels.

The different levels of the Priority Hierarchy

The priority hierarchy comprises the following levels:

- TDAT

- User

- One or several virtual partitions (TIWM: always one)

- A tactical workload tier

- Between 0 and n SLG workload tiers (TASM only)

- One timeshare tier

The TDAT Priority Level, User, and System Tasks

TDAT sits at the top of the hierarchy, with USER, DFLT (Default), and SYS (System) directly below it. Teradata system tasks run under DFLT and SYS, while user-initiated tasks run under USER. SYS and DFLT tasks receive their resources before any resources are allocated to user tasks, because both execute critical system-related work.

The USER level comprises only tasks performed by database users, excluding any internal tasks.

Splitting the System - Teradata Virtual Partitions

Virtual partitions are exclusive to Teradata systems running on SLES 11 because they are tied to the SLES 11 priority hierarchies. The feature is therefore available only on Teradata 14 or later.

Virtual partitions divide the system resources at the highest level.

Virtual partitions are commonly employed by multiple business owners sharing a Teradata system. Each owner can fully utilize a portion of the system resources. It is important to note that the allocated share is not exclusively reserved. If a virtual partition is not fully utilizing its assigned resources at a given time, other partitions may access them.
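A minimal sketch of this borrowing behavior follows. The partition names, shares, and the `partition_usage` helper are hypothetical, and the order in which spare capacity is handed out (largest share first) is an assumption made only to keep the example short.

```python
def partition_usage(shares, demands):
    """shares and demands: dicts keyed by partition name, values in percent."""
    # Each partition first uses what it needs, up to its own share.
    usage = {p: min(demands[p], shares[p]) for p in shares}
    spare = sum(shares.values()) - sum(usage.values())
    # Spare capacity is lent to partitions that still want more.
    # Assumption: partitions are served in order of their share size.
    for p in sorted(shares, key=shares.get, reverse=True):
        extra = min(spare, demands[p] - usage[p])
        usage[p] += extra
        spare -= extra
    return usage


print(partition_usage({"retail": 60, "finance": 40},
                      {"retail": 30, "finance": 70}))
```

Here "retail" needs only half of its 60% share, so "finance" can temporarily exceed its 40% share. Once "retail" demands its full share again, both partitions fall back to 60% and 40%.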

As noted earlier, TIWM provides exactly one virtual partition, so the system cannot be split. Splitting the system requires TASM.

Multiple workloads can be assigned to each virtual partition.

The Tactical Workload Tier

The highest workload priority level is reserved for tactical workloads, which may use all available resources except for the 5% held back by the “remaining” workload.

Tasks assigned to the tactical tier have distinct advantages: they are automatically expedited and can use reserved AMP worker tasks (AWTs). They can also preempt other tasks and take over the CPU immediately; the suspended tasks resume afterward. Preemption as such is not exclusive to tactical workloads, but only tactical tasks get the CPU immediately. Tasks from other levels can generally preempt a running task only if that task has consumed significantly more CPU than the preempting one.

Assigning resource-intensive, long-running queries to tactical workloads can starve lower-level workloads of the resources they need.

Assign only legitimate tactical requests to the tactical tier, such as Single-AMP or group-AMP operations.

Teradata workload management includes a safety mechanism to mitigate this risk: tactical workload exceptions.

Tactical workload exceptions enable the transfer of requests from the tactical tier to a lower hierarchy level. This process is automatic and cannot be disabled. It occurs when a request consumes a specific amount of CPU seconds, which can be adjusted.

Monitor this exception to identify frequent requests that are causing it. Move these requests to a lower-priority workload.
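The idea behind a tactical exception can be sketched as follows. This is an illustration only: the `Request` class, the `charge_cpu` helper, the 2-second threshold, and the target workload name are all hypothetical and do not reflect Teradata internals or defaults.

```python
from dataclasses import dataclass


@dataclass
class Request:
    request_id: int
    workload: str = "tactical"
    cpu_seconds: float = 0.0


def charge_cpu(request, seconds, threshold=2.0, demote_to="timeshare_medium"):
    """Add consumed CPU time and demote the request once it exceeds the threshold."""
    request.cpu_seconds += seconds
    if request.workload == "tactical" and request.cpu_seconds > threshold:
        # The request no longer behaves like a tactical query: move it down.
        request.workload = demote_to
    return request


r = Request(request_id=1)
charge_cpu(r, 1.5)
print(r.workload)
charge_cpu(r, 1.0)
print(r.workload)
```

The first call leaves the request in the tactical workload; the second pushes its total CPU consumption over the assumed threshold, so it continues in the lower-priority workload.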

The SLG Workload Tier

The SLG tiers (TASM) or the timeshare tier (TIWM) sit directly below the tactical tier.

Each workload within an SLG tier has a weight that defines the percentage of the tier’s resources it may consume. This weight acts as an upper limit, with one exception that is explained below. Requests running in the workload may, of course, use less.

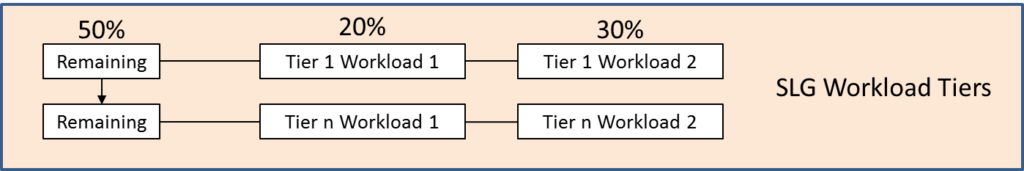

The graphic above shows that 20% of the “Tier 1” resources are dedicated to “Workload 1”, while “Workload 2” receives 30%.

If some workloads in an SLG tier cannot use their assigned resources, the surplus is distributed evenly among the other workloads on that tier that need more. Whatever is still left over is passed to the next lower tier in the hierarchy.

The other 50% of the tier’s resources belong to the “remaining” workload and are passed to the tier below tier 1, which is either another SLG tier or the timeshare tier, depending on the configuration. If the 50% assigned to the workloads on tier 1 (20% + 30%) cannot be fully used, and the surplus cannot be reassigned among the tier 1 workloads, the unused portion is added to the 50% of the “remaining” workload.
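The arithmetic for a single SLG tier can be sketched like this. The `run_tier` helper and the demand figures are hypothetical; the weights of 20% and 30% are taken from the example, and the single-pass surplus sharing is a simplification of what a real scheduler does continuously.

```python
def run_tier(weights, demands):
    """weights and demands: percent of the tier's resources, keyed by workload."""
    # Each workload uses what it needs, capped by its weight.
    used = {wl: min(demands[wl], weights[wl]) for wl in weights}
    surplus = sum(weights.values()) - sum(used.values())
    hungry = [wl for wl in weights if demands[wl] > used[wl]]
    # Share the surplus evenly among workloads that still want more
    # (single pass only, an assumption of this sketch).
    for wl in hungry:
        extra = min(surplus / len(hungry), demands[wl] - used[wl])
        used[wl] += extra
    # Everything not used on this tier flows to the tier below.
    passed_down = 100 - sum(used.values())
    return used, passed_down


weights = {"Workload 1": 20, "Workload 2": 30}
print(run_tier(weights, {"Workload 1": 5, "Workload 2": 40}))
print(run_tier(weights, {"Workload 1": 0, "Workload 2": 0}))
```

In the first call, "Workload 1" leaves 15% unused, "Workload 2" picks up the 10% it still needs, and 55% of the tier's resources flow down. In the second call the tier is idle and passes everything down.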

If there are no active requests on tier 1, all of its resources are passed down to the level below, which is another SLG tier or the timeshare tier. Note that the weights are relative: “Workload 1” on tier 1 can use up to 20% of the resources that reach tier 1 from the level above, not 20% of the entire Teradata system.

Note that all active requests within a workload share the workload’s weight. A single active request in “Workload 1” on tier 1 can use up to 20% of the tier’s resources. If two requests are active, they share those 20%, so each gets about 10% when both are busy. The concurrency within a workload therefore significantly affects the resources available to each request.
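Relative weights and concurrency combine into a simple calculation, sketched below. The `per_request_share` helper and the figure of 80% reaching the tier are hypothetical; the even split between concurrent requests assumes that all of them are equally busy.

```python
def per_request_share(tier_incoming, workload_weight, active_requests):
    """Return (workload cap, share per request), both in percent of the system.

    tier_incoming:   percent of the system that reaches the tier
    workload_weight: relative weight of the workload within the tier (percent)
    """
    workload_cap = tier_incoming * workload_weight / 100
    if active_requests == 0:
        return workload_cap, 0.0
    # Assumption: concurrent requests split the workload cap evenly.
    return workload_cap, workload_cap / active_requests


print(per_request_share(80, 20, 1))
print(per_request_share(80, 20, 2))
```

If only 80% of the system reaches the tier, a workload with a weight of 20% is capped at 16% of the system, and two concurrent requests get 8% each.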

The perceptive reader will have noticed that the tactical tier does not use relative weights. All workloads in the tactical tier have unrestricted access to the resources available to the tier. The assumption is that only genuinely tactical requests run there.

A final cautionary note: Minimize the number of SLG tiers. The more SLG tiers you define, the less consistent the runtime behavior of your requests will be, because requests on lower levels of the hierarchy depend on what the higher levels leave over.

Avoid the temptation to allocate excessive resources to the highest SLG tiers. Instead, give critical workloads what they actually need. Remember that unused resources are not lost: they flow down, and whatever reaches the timeshare tier is distributed among the workloads there.

The Timeshare Tier

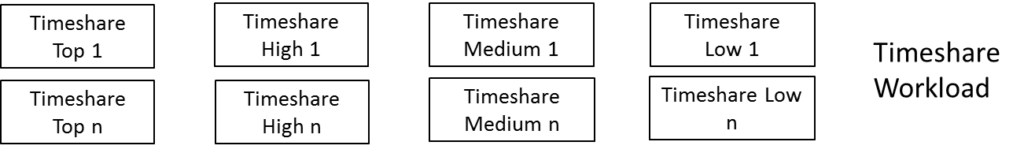

The timeshare tier is the lowest tier in the priority hierarchy and is available in both TASM and TIWM. The SLG tiers, by contrast, are available only in TASM and do not have to be used at all.

The resources passed down from the lowest SLG tier (or directly from the tactical tier in TIWM, or in TASM when no SLG tiers are defined) become available to the timeshare tier. However, resource allocation for timeshare workloads works differently.

The timeshare tier consists of four access levels: Top, High, Medium, and Low.

Fixed ratios are defined between these four levels. Unlike on the SLG tiers, no relative weights per workload are used:

- Each request in a workload of the group “Top” can consume eight times as much as any request in a workload of the group “Low.”

- Each request in a workload of the group “High” can consume four times as much as any request in a workload of the group “Low.”

- Each request in a workload of the group “Medium” can consume twice as much as any request in a workload of the group “Low.”

This rule is valid across all workloads defined on the timeshare tier.

Requests on an SLG tier compete only with requests in the same workload for that workload’s share. On the timeshare tier, by contrast, all requests from all timeshare workloads compete for the total resources allocated to the tier.

Each “Top” request receives eight times the resources of each “Low” request, regardless of how many requests are active in the four levels (Top, High, Medium, Low). All workloads in the timeshare tier share the same pool of resources.
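The fixed ratios translate into per-request shares as sketched below. The `timeshare_shares` helper and the request counts are hypothetical; the weights 8, 4, 2, and 1 come from the ratios listed above, and the model assumes every active request is busy.

```python
TIMESHARE_WEIGHTS = {"Top": 8, "High": 4, "Medium": 2, "Low": 1}


def timeshare_shares(active, tier_resources=100.0):
    """Return the share of each single request per level.

    active: dict mapping a level name to its number of active requests.
    tier_resources: what reaches the timeshare tier (percent).
    """
    total_weight = sum(TIMESHARE_WEIGHTS[lvl] * n for lvl, n in active.items())
    if total_weight == 0:
        return {}
    # Every request gets a slice proportional to the weight of its level.
    return {
        lvl: tier_resources * TIMESHARE_WEIGHTS[lvl] / total_weight
        for lvl, n in active.items()
        if n > 0
    }


shares = timeshare_shares({"Top": 1, "Low": 2})
print(shares)
```

With one "Top" request and two "Low" requests, the "Top" request gets 80% of the tier and each "Low" request gets 10%, which preserves the 8:1 ratio per request.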

Once the remaining resources reach the timeshare tier, there are two possibilities:

If the timeshare workloads consume everything that reaches them, 100% of the system’s resources have been distributed and used. If they do not, the unused resources remain available to other workloads that can use them.