Introduction

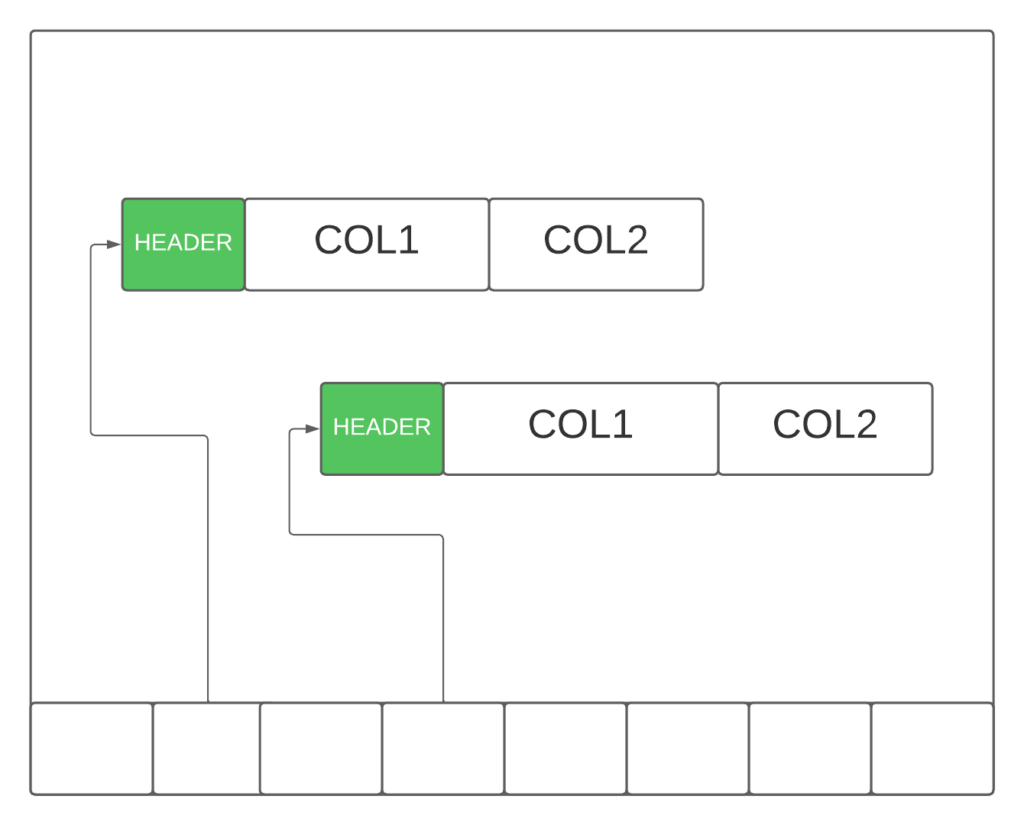

In a row-oriented database engine like Teradata, data is organized and stored in units called data blocks. Each data block features a fixed header and accommodates multiple rows. Every row consists of a record header followed by its corresponding columns.

When a database retrieves and stores a data block in the cache, it accesses all rows and columns. This raises some intriguing questions: Why is Teradata SELECT * considered slow and resource-intensive if all columns are already available in memory? Is its performance as poor as it is often portrayed? What factors contribute to this perception? Join us as we investigate these questions and unravel the mysteries surrounding Teradata SELECT * and its impact on database performance.

No Index Usage

Optimization using SELECT * in queries can prevent the database optimizer from employing index-only scans. For instance, let’s say you need to retrieve the CustomerId of customers who made purchases exceeding $1,00, and there is an index on the purchase amount column that includes the CustomerId. This index is suitable for such a query.

However, by utilizing Teradata SELECT * and requesting all fields, the database must access the main table data block to gather the remaining fields. Consequently, this leads to substantially more I/O operations. In contrast, if you had avoided using Teradata SELECT *, the database could have efficiently scanned the index and returned the pertinent CustomerIds, optimizing the query’s performance.

Here is a more complex example involving a join between two tables:

CREATE SET TABLE TheDatabase.TheTable1

(

REF_PK BIGINT NOT NULL,

COL1 CHAR(20) NOT NULL,

COL2 INTEGER,

…

COL99 CHAR(100)

) PRIMARY INDEX ( COl1 );

CREATE MULTISET TABLE TheDatabase.TheTable2

(

PK BIGINT NOT NULL,

COL1 CHAR(20) NOT NULL,

COl2 INTEGER,

…

COL20 INTEGER

) PRIMARY INDEX ( COL1 )

INDEX ( REF_PK )

;In scenarios where two tables possess distinct primary indexes, it is necessary to redistribute or copy the rows to a common AMP to execute the join operation. To address this, during our test case, we created a Non-Unique Secondary Index (NUSI) on the join column (REF_PK) of “TheTable2.”

Our starting point involves a Teradata SELECT * query that fetches all columns from both tables:

EXPLAON

SELECT *

FROM

TheDatabase.TheTable1 t01

INNER JOIN

TheTable2 t02

ON

t01.RELATED_PK = t02.PK

;

3) We execute the following steps in parallel.

1) We do an all-AMPs RETRIEVE step from Q_DBS_SGL_WORK.TheTable2

in view TheDatabase.TheTable2 by way of an all-rows scan with

no residual conditions into Spool 2 (all_amps) (compressed

columns allowed) fanned out into 10 hash join partitions,

which is redistributed by the hash code of (

TheDatabase.TheTable2.PK) to all AMPs. The size of

Spool 2 is estimated with high confidence to be 16,206,188

rows (1,798,886,868 bytes). The estimated time for this step

is 7.86 seconds

2) We do an all-AMPs RETRIEVE step from TheDatabase.t01 by way

of an all-rows scan with no residual conditions into Spool 3

(all_amps) (compressed columns allowed) fanned out into 10

hash join partitions, which is redistributed by the hash code

of (TheDatabase.t01.RELATED_PK) to all AMPs. The input table

will not be cached in memory but is eligible for

synchronized scanning. The result spool file will not be

cached in memory. The size of Spool 3 is estimated with high

confidence to be 25,914,926 rows (17,259,340,716 bytes). The

estimated time for this step is 1 minute and 13 seconds.

4) We do an all-AMPs JOIN step from Spool 2 (Last Use) by way of an

all-rows scan, which is joined to Spool 3 (Last Use) by way of an

all-rows scan. Spool 2 and Spool 3 are joined using a hash join

of 10 partitions, with a join condition of ("RELATED_PK = PK").

The result goes into Spool 1 (group_amps), which is built locally

on the AMPs. The result spool file will not be cached in memory.

The size of Spool 1 is estimated with low confidence to be

36,760,336 rows (30,731,640,896 bytes). The estimated time for

this step is 24.07 seconds.The execution plan above indicates that both tables have their rows redistributed based on the hash code of the join column. No WHERE condition is applied. Therefore, a full table scan is necessary. The retrieve step estimations are with high confidence.

Enhancing the Teradata Column Selection

In the second query, we choose to retrieve only a single column:

EXPLAIN SELECT

t01.BOI_CODE

FROM

TheDatabase.TheTable1 t01

INNER JOIN

TheTable2 t02

ON

t01.RELATED_PK = t02.PK

;3) We execute the following steps in parallel.

1) We do an all-AMPs RETRIEVE step from TheDatabase.TheTable2

in view TheDatabase.TheTable2 by way of a traversal of index #

8 without accessing the base table with no residual

conditions into Spool 2 (all_amps) fanned out into 2 hash

join partitions, which is redistributed by the hash code of (

TheDatabase.TheTable2.PK) to all AMPs. The size of

Spool 2 is estimated with high confidence to be 16,206,188

rows (275,505,196 bytes). The estimated time for this step

is 1.62 seconds.

2) We do an all-AMPs RETRIEVE step from TheDatabase.t01 by way

of an all-rows scan with no residual conditions into Spool 3

(all_amps) fanned out into 2 hash join partitions, which is

redistributed by the hash code of (

TheDatabase.t01.RELATED_PK) to all AMPs. The input table

will not be cached in memory, but it is eligible for

synchronized scanning. The size of Spool 3 is estimated with

high confidence to be 25,914,926 rows (647,873,150 bytes).

The estimated time for this step is 8.72 seconds.

4) We do an all-AMPs JOIN step from Spool 2 (Last Use) by way of an

all-rows scan, which is joined to Spool 3 (Last Use) by way of an

all-rows scan. Spool 2 and Spool 3 are joined using a hash join of 2 partitions, with a join condition of ("RELATED_PK = PK").

The result goes into Spool 1 (group_amps), which is built locally

on the AMPs. The size of Spool 1 is estimated with low confidence

to be 36,760,336 rows (1,066,049,744 bytes). The estimated time

for this step is 1.85 seconds.The execution plan for the second query has been significantly optimized.

In both scenarios, 16,206,188 rows are retrieved from “TheTable2”; however, the spool size is considerably smaller since only the necessary columns are included (275,505,196 as opposed to 1,798,886,868 bytes). This reduction also applies to the spool of “TheTable1” (647,873,150 bytes compared to 30,731,640,896 bytes).

The join operation now requires only two hash join partitions, down from the original 10.

When the column from “TheTable2” is not selected, the optimizer favors the NUSI over the base table. This preference would not hold if columns from “TheTable2” were part of the result set. The NUSI’s usage is evident from the reduced spool size for “TheTable2.”

Take note of the diminished resource consumption outlined below. The resource requirements for the second query are considerably lower than the first: it demands 75% less I/O, 77% fewer CPU seconds, and an impressive 87% reduction in spool space.

| NumResultRows | IOCount | CPUTime | Spool space | |

| One Column | 47.314.937,00 | 118.289,00 | 120,63 | 2.367.786.496 |

| SELECT * | 47.314.937,00 | 472.106,00 | 522,03 | 17.860.372.480 |

Navigating the Complexities of Deserialization Cost

In data processing, deserialization, or decoding, is critical in converting raw bytes into structured data types. This essential transformation involves taking a byte sequence, which typically stems from sources like files or network communication, and reassembling it into a more organized data format.

When you venture into the world of Teradata SELECT * queries, the database is tasked with deserializing every column, even those that might be superfluous to your particular use case.

This additional task can amplify computational overhead and ultimately hinder query performance. By strategically selecting only the required columns, you can effectively reduce the deserialization cost and boost the efficacy of your queries.

Large Objects

In Teradata optimization, SELECT * queries are a notable concern, primarily because not all columns are stored within the data block. Large columns, such as text or blobs, are tucked away in sub-tables and only retrieved upon request. These columns are frequently compressed, adding an extra layer of complexity to the query process.

When you embark on a Teradata SELECT * query that encompasses numerous blobs, you inadvertently impose an additional burden on the database. It must fetch the values from sub-tables, decompress them, and deliver the results to the client. Understanding these hidden challenges can refine your query strategy to ensure smoother performance and more efficient resource utilization.

Tuning the Table Design for Large Objects

Longer textual content, such as descriptions associated with other columns, is commonly stored in VARCHAR columns. These columns possess a maximum character capacity of 64,000 for the LATIN character set and 32,000 for the UNICODE character set. It is vital to consider the impact of extended text on query performance, as longer text inputs cause the number of rows per data block to decrease.

This inverse relationship between text length and rows per data block contributes to increased I/O-intensive full table scans, negatively affecting overall performance. By carefully evaluating text length in VARCHAR columns, you can implement more effective strategies and optimize your database operations, ensuring efficient resource usage and streamlined performance.

CLOBs (Character Large Objects) offer a more effective solution for storing lengthy strings, as they are housed in a separate sub-table. By isolating CLOBs from the main table, queries that require a full table scan can experience a substantial performance boost. This improvement occurs because Teradata reads fewer data blocks when the CLOB column is not selected, reducing I/O operations.

Consider the following example table, where each row’s “Desc” column contains detailed descriptions:

CREATE TABLE Varchar_Table

(

ID INTEGER NOT NULL,

COl1 CHAR(01),

Desc VARCHAR(30000)

) PRIMARY INDEX (ID);When a secondary index is not present, all data blocks must be transferred from the disk to the FSG cache, even if our interest lies solely in the content of the “ID” column. Considering that all rows encompass extensive textual data within the “Desc” column, this scenario significantly increases I/O operations.

SELECT ID FROM Varchar_Table WHERE COl1 = 'X' ;Altering the data type from VARCHAR to CLOB (Character Large Object) can substantially decrease resource consumption. This improvement is achieved by enabling more rows to be stored per data block and minimizing the amount of disk transfer required by not accessing the sub-table with the CLOB. Considering this data type change, you can optimize database performance and enhance resource efficiency:

CREATE TABLE CLOB_Table

(

ID INTEGER NOT NULL,

COl1 CHAR(01),

Desc CHARACTER LARGE OBJECT

) PRIMARY INDEX (ID);SELECT ID FROM Varchar_Table WHERE COl1 = 'X';Nevertheless, the best advice remains to avoid selecting unneeded columns altogether, as this practice always enhances query efficiency and resource management. Choosing an unneeded CLOB column in a SELECT * query harms performance. This selection necessitates reading both the base table and the sub-table containing the CLOB data. Here is a Teradata query example:

SELECT * FROM Varchar_Table WHERE COl1 = 'X' ;Network Cost Considerations

Before delivering the query result to the client, it must be serialized by the communication protocol supported by Teradata SQL. The greater the volume of data requiring serialization, the more CPU effort is needed. Once the bytes are serialized, they are transmitted via TCP/IP. As the number of segments to send increases, so does the transmission cost, ultimately impacting network latency.

Selecting all columns may necessitate deserializing large columns, such as blobs, which clients may never utilize.

Client Deserialization Costs

Upon receiving the raw bytes, the client application must deserialize the data into the language employed by the client, further contributing to overall processing time. The more data present in the pipeline, the slower this procedure is.

Summary

In summary, a Teradata SELECT * query encompasses numerous intricate processes, making it advisable only to select the fields required to minimize unnecessary overhead. Remember that if your table contains a limited number of columns with simple data types, the overhead of a SELECT * query may be inconsequential. However, adopting a selective approach to column retrieval in your queries is generally considered good practice.

Visit for general information on the SELECT statement:

Thank you so much Wenzlofsky for new article. I am member with dwhpro from last 6 years. Whenever I get email notification from you on Teradata related article , I won’t hesitate to open and read it irrespective of the situation. I always admire it.

Thank you very much.