Introduction

Numerous data warehouse projects fail due to the selection of an unfitting data model at the project’s outset.

I advocate for a normalized data model, but it has become unfashionable to store data in a third normal form or higher in recent years. This is likely due to the rise of unstructured data in the age of Big Data. As a result, structured data is typically stored in a data warehouse or denormalized. While denormalization may be appropriate in certain circumstances, it is often used as a temporary solution due to poor decisions in creating the physical data model.

This article examines denormalization techniques in situations where it is beneficial.

It is advisable to establish a normalized data model initially and only resort to denormalization if performance necessitates it. Denormalization must be carefully thought out and should not be executed based on intuition.

The impact of denormalization is uncertain, as its purpose is to enhance performance for particular applications. However, the repercussions on other applications are often unknown until it is too late. It is unacceptable to have an illogical data model without normalization.

Effects of denormalization

- Denormalization can improve the performance of the target application but degrade the performance of other applications. So we do precisely the opposite of what we want to achieve.

- A denormalized data model makes it challenging to develop new applications because a specialized model for a particular application usually does not fit others.

- Denormalization leads to DML (insert, update) anomalies. This is exactly what we wanted to prevent with a 3NF data model.

- Denormalization leads to higher costs of new applications due to increased complexity for the aforementioned reasons.

Having considered the drawbacks, let us delve into the methods employed for denormalization.

Denormalization techniques

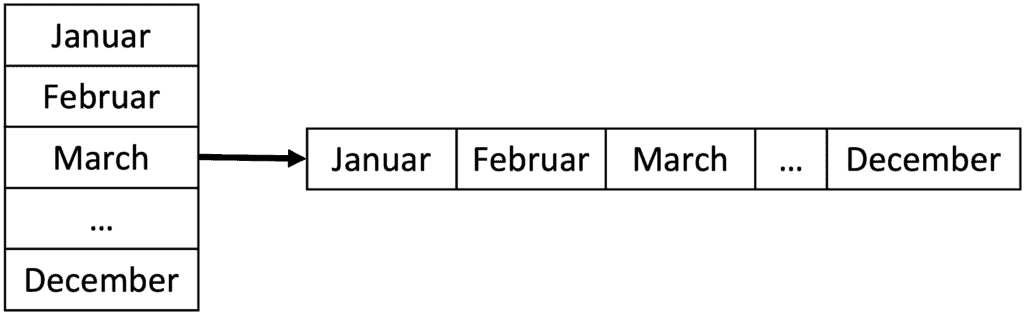

Repeating Groups

Repeated groups are avoided in a normalized data model since they breach 1NF. However, including them can save storage and prevent the need for joins. Let’s suppose we’re creating a table to contrast our clients’ end-of-month account balances. The present balance must be compared every month with the preceding month’s balance.

The most suitable approach is to store the information in a normalized table, where each row represents a unique customer-month combination.

To consolidate the necessary rows, we can either perform a self-join or employ an ordered analytic function. The performance may be enhanced by utilizing repeating groups. It is optimal to retain a singular row for each customer, containing the balance for each month in its corresponding column (BalanceJanuary, BalanceFebruary,…, BalanceDecember).

Advantages of Repeating Groups

- Increase performance

- Saves disk space

- Avoidance of analytical functions

Disadvantages of Repeating Groups

- DLL may need to be extended if not enough columns are provided. As a consequence also, an adaptation of the applications

- The reporting options are very limited to one application

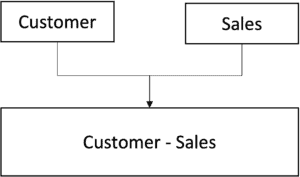

Prejoins of Tables or Join Indexes

Tables can be prejoined using two methods.

Teradata provides an alternative approach to manual prejoins and table storage in your ETL/ELT process through the use of Join Indexes.

Both prejoins offer benefits and drawbacks. Join indexes, however, can be challenging to convince the Teradata optimizer to utilize without ample experience. Nevertheless, they are system-maintained and may be an optimal choice for applications requiring a “set and forget” approach.

I suggest creating manual prejoins for my ETL/ELT tasks to ensure stable performance. Additionally, incorporating join indexes would support reporting tools like Microstrategy and Business Objects.

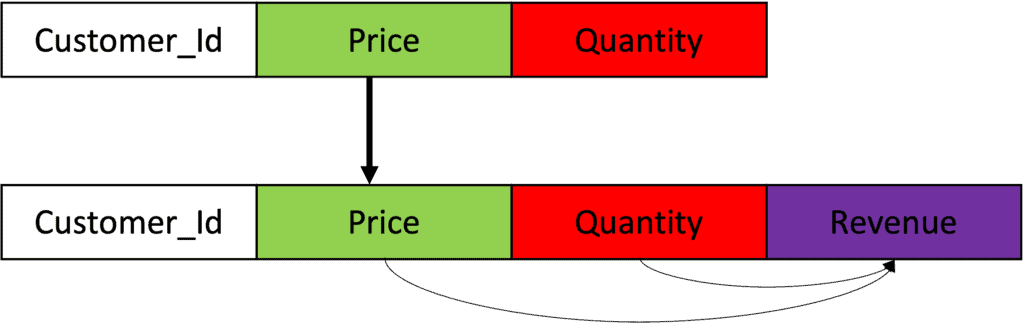

Derived Information

Storing pre-calculated data is a prevalent denormalization technique.

We would opt for this method if computing the necessary data is costly and performed regularly. For instance, we could retain the mean account balance computed across the past 12 months, thus eliminating the need for a pricey ordered analytical function to derive such information.

Besides prejoin, other options for derived data do not require denormalizing tables. These include Aggregate Join Index, Global Temporary Tables, and Views where the values are derived. If adequate performance can be attained, these approaches are the preferred choice.

Volatile Tables and Global Temporary Tables instead of Denormalization

When performance-enhancing techniques are necessary, one can turn to Global Temporary Tables and Volatile Tables as viable alternatives for denormalizing the PDM. These temporary database objects facilitate normalization while leaving the PDM untouched. As a result, one can avoid repeating groups, derived data, and prejoins on the PDM.

Conclusion

Although the aforementioned methods may assist in resolving performance problems, they should not be our initial option. Teradata provides various effective optimization tools, including row partitioning, secondary indexes, and join indexes. We must employ these tools primarily to eliminate performance issues. We should consider classical denormalization only if these techniques cannot achieve the desired outcome.

Denormalizing data should be avoided as it may seem like the easiest and most practical method to implement. However, it can lead to significant expenses in the long run due to issues such as redundant data and inconsistencies.

Several performance issues I had to address resulted from “intuitive denormalization,” which refers to denormalization as the easiest solution.