Teradata BTEQ is the earliest Teradata tool for executing SQL queries. It allows users to run queries and transfer data through import and export.

Teradata BTEQ provides greater functionality than Teradata SQL Assistant and is an indispensable tool for Teradata developers. BTEQ can be utilized interactively or to run batch scripts.

ETL-Tools versus BTEQ

BTEQ is a superior option to ETL tools, as the latter are typically misused and fail to exploit their theoretical benefits, including data lineage, fully. It is common for ETL tools to be used for scheduling purposes rather than their intended functionality.

Data lineage is often ignored in ETL tools due to performance concerns.

Using both BTEQ and an ETL tool in the ETL process results in losing the latter’s advantages. There are customers who solely opt to use an ETL tool due to their inability to load EBCDIC files in Linux because they don’t know how to use the iconv command.

Remember that SQL and Linux developers are plentiful, whereas finding experts in ETL tools can be challenging. This is particularly true for Ab Initio and similar vendors, which has limited documentation and a niche market.

Coding simple tasks in BTEQ takes only a few minutes, whereas implementing them in an ETL tool often takes much longer. This demonstrates that ETL tools do not necessarily enhance development manageability and efficiency, as is often claimed.

Based on my over twenty years of experience in data warehousing, I have not encountered any customers who have effectively utilized an ETL tool, regardless of the specific tool, resulting in tangible benefits.

ETL tools are generally useful, but untrained developers may negate their potential advantages.

I suggest verifying if Teradata’s tools, such as BTEQ and TPT Load, are inadequate for the required tasks.

Frequently Asked Questions about Teradata BTEQ

How is data imported and exported with BTEQ?

BTEQ is not a bulk load utility like Fastload, Multiload, or Fast Export. Data is exported or imported transactional and row by row. BTEQ import and export are, therefore, not suitable for large amounts of data.

Can Teradata BTEQ perform loops?

Loops are not possible in BTEQ.

Can I define my variables in BTEQ?

Variables cannot be defined. A common workaround is to use Linux parameters in the BTEQ scripts and replace them with a wrapper script.

What types of conditional logic does BTEQ handle?

We can use the “.GOTO” command with which we can jump to a specific label (indicated by “.LABEL” ).

Which methods for conditional statements and branches exist in BTEQ?

There are the conditional statements available like in most procedural languages:

IF, THEN, ELSE, ENDIF.

Can I determine how many rows are affected by an INSERT, UPDATE, or DELETE?

BTEQ returns the result in the following variables: INSERTCOUNT, UPDATECOUNT, ACTIVITYCOUNT These can be used in a “.GOTO” command, for example:

.IF UPDATECOUNT = 0 THEN .GOTO <NowRowsUpdated>

How is error handling implemented in a Teradata BTEQ script?

We can use the variable ERRORCODE, which is holding the result of each executed statement. This ERRORCODE can be used for different actions:

— Terminate the script and return error code 255 to the client

.IF ERRORCODE <> 0 THEN .QUIT 255

— If the step was successful, goto a certain label

.IF ERRORCODE <> 0 THEN .GOTO <NextStep>

Is there a possibility of executing Linux commands from BTEQ?

You can execute the command “.OS <linux_command>”.

Can I run another BTEQ from within BTEQ?

Yes, with the command “.RUN <other_bteq>”.

Teradata BTEQ Export

BTEQ enables exporting of data in various formats, including four of the most significant ones:

- EXPORT DATA:

Exports data in record mode.

You can use this format to exchange data between Fastload, Fast Export, Multiload, and BTEQ. - EXPORT REPORT:

The data is exported as a report in a readable format and contains the columns as headers. This is the default mode. - EXPORT INDICDATA:

NULL values are marked in this format - EXPORT DIF:

Creates a file in DIF (Data Interchange Format) format, which can be read on any Windows system.

A Teradata BTEQ Export Example

BTEQ export writes the Customer table in record mode to a text file:

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

.EXPORT DATA FILE = c:\temp\dwhpro.txt

SELECT * FROM Customer;

.EXPORT RESET

.QUIT

.LOGOFFThe BTEQ export function writes the “Customer” table in report mode to a user-readable text file with column headers.

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

.EXPORT REPORT FILE = c:\temp\dwhpro.txt

SELECT * FROM Customer;

.EXPORT RESET

.QUIT

.LOGOFFThe BTEQ export function writes the Customer table in report mode to a text file while utilizing the TITLE command to remove column headers:

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

.SET TITLEDASHES OFF

.EXPORT REPORT FILE = c:\temp\dwhpro.txt

SELECT FirstName (TITLE ''), LastName (TITLE '')

FROM Customer;

.EXPORT RESET

.QUIT

.LOGOFFTo generate a comma-separated text file, follow these steps:

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

.SET TITLEDASHES OFF

.EXPORT REPORT FILE = c:\temp\dwhpro.txt

SELECT FirstName|| ',' || LastName (TITLE '')

FROM Customer;

.EXPORT RESET

.QUIT

.LOGOFFA Teradata BTEQ Import Example

A file in record mode can be imported via BTEQ. The command “REPEAT” directs the reading of all lines.

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

.IMPORT DATE FILE = c:\temp\dwhpro.txt

.REPEAT *

USING (FirstName VARCHAR(200), LastName VARCHAR(200))

INSERT INTO Customer VALUES (:FirstName,:LastName);

.QUIT

.LOGOFFImport a CSV file by separating the text with commas. Ensure that all columns are defined as VARCHAR during the import process.

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

.IMPORT VARTEXT FILE = c:\temp\dwhpro.txt

.REPEAT *

USING (FirstName VARCHAR(200), LastName VARCHAR(200))

INSERT INTO Customer VALUES (:FirstName,:LastName);

.QUIT

.LOGOFFTeradata BTEQ Sessions

Multiple sessions are analogous to multiple users with identical credentials. Each session is associated with the same user and has the ability to execute one or more consecutive transactions.

.SET SESSIONS 100

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

<...>

.LOGOFFUtilizing multiple sessions can enhance the efficiency of a BTEQ script.

Multiple sessions are only applicable for data access through PI or USI. While using several sessions in BTEQ imports may be appropriate, it is not recommended for BTEQ exports.

For additional information, please refer to the following detailed report.

Teradata BTEQ Fast Path Insert

When multiple INSERT statements target the same table with identical primary indexes on both source and target tables, it is advisable to use the following technique. This method circumvents the transaction log when writing data to an empty table.

Place a semicolon at the beginning of the lines (“;INSERT INTO”) as shown in the following example

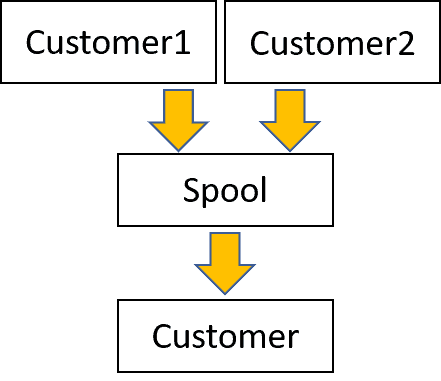

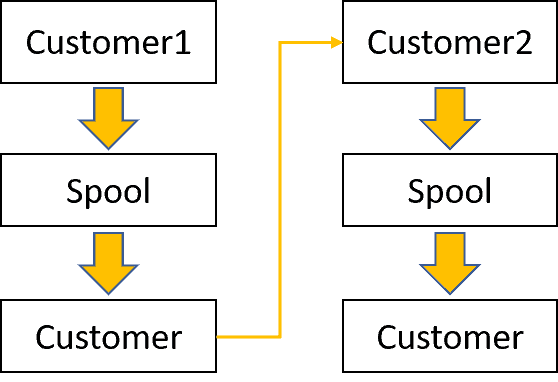

.SET SESSIONS 100

.LOGON SYSTEM1/DWHPRO,DWHPRO_PWD

INSERT INTO CUSTOMER SELECT * FROM Customer1

;INSERT INTO CUSTOMER SELECT * FROM Customer2;

.LOGOFFThe following figure illustrates the contrast between the Slow Path INSERT operation when the comma does not appear at the beginning of a line and when the tables have different primary indexes.

BTEQ FAQ

What is BTEQ in Teradata?

BTEQ, or Basic Teradata Query, is a command-driven utility that allows users to interact with Teradata databases. It is a popular tool for querying, importing, and exporting data within the Teradata environment. BTEQ is designed for batch processing, making it a powerful and flexible tool for executing SQL queries and scripts, generating reports, and automating tasks across different platforms.

What is the difference between Teradata SQL Assistant and BTEQ?

Teradata SQL Assistant and BTEQ are tools for interacting with Teradata databases, but they have distinct features and different purposes. Teradata SQL Assistant is a graphical user interface (GUI) that allows users to write, edit, and execute SQL queries and import and export data. It is primarily designed for ease of use and is more suitable for users who prefer a visual approach to database management.

BTEQ, on the other hand, is a command-line utility that is more suitable for batch processing and automation. It is a more powerful and flexible tool for advanced users who are comfortable working with command-line interfaces. While Teradata SQL Assistant offers a user-friendly experience, BTEQ provides greater control and customization options for executing SQL queries and scripts.

What is a BTEQ command?

A BTEQ command is a text-based instruction to perform a specific action or operation within the BTEQ environment. BTEQ commands can be divided into two categories: SQL commands and BTEQ-specific commands. SQL commands are standard SQL statements that can be executed in BTEQ, such as SELECT, INSERT, UPDATE, and DELETE. BTEQ-specific commands are unique to the BTEQ utility and provide additional functionality, such as controlling the formatting of query results, managing error handling, and setting environment variables.

Some examples of BTEQ-specific commands include:

.LOGON: Used to log on to a Teradata database.LOGOFF: Used to log off from a Teradata database.SET: Used to configure BTEQ settings, such as error handling and output formatting.EXPORT: Used to export query results to a file

How to run BTEQ in Teradata?

To run BTEQ in Teradata, follow these steps:

- Open a command prompt or terminal window on your computer.

- Connect to the Teradata database by entering the BTEQ command followed by your database credentials in the following format:

bteq .LOGON your_database/your_username,your_password - After successfully logging in, you can start executing SQL commands and BTEQ-specific commands.

- To execute a command, type the command and press Enter.

- To execute multiple commands, you can create a script file containing the commands and then run the script in BTEQ using the command:

bteq < script_filename - To exit BTEQ, type

.QUITand press Enter.

Please see the Teradata BTEQ documentation linked below:

https://docs.teradata.com/r/Basic-Teradata-Query-Reference-17.20/Introduction-to-BTEQ