Teradata Query Parallelism

A query on a Teradata system runs parallelly in every step, whether for joining, sorting, or aggregating data.

Teradata’s uniqueness lies in its ability to apply parallelism at every step of the query process. This advantage resulted from its architectural design, which integrated high levels of parallelism from the start, even when most components were hardware-based. As a result, Teradata has a significant advantage over other database systems.

The architecture of Teradata has enabled the implementation of additional features to enhance parallelism over time. Without it, many advancements would not have been achievable.

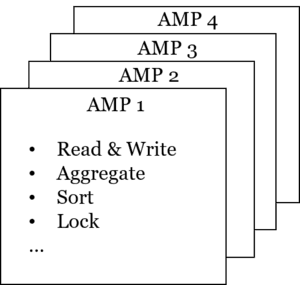

Parallel Execution across the AMPs

AMPs are autonomous procedures that execute diverse tasks independently. Every SQL query is initially divided into subtasks and allocated to the AMPs. Each AMP accomplishes its designated task and issues a partial outcome. Upon completion of all AMPs, the final result is returned.

The AMP level’s parallelism is a primary factor contributing to the exceptional performance of a properly utilized Teradata System.

AMPs are versatile and able to perform any task without specialization.

Numerous AMPs are presently accessible on every Teradata System.

The Tasks of a Teradata AMP

- Reading of Rows

- Writing of Rows

- Row Locking

- Sorting

- Aggregating

- Index creation and maintenance

- Maintaining the transaction log

- Backup and Recovery

- Bulk and Transactional Loading

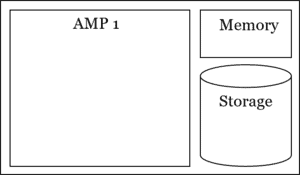

The Components of a Teradata AMP

Every AMP possesses unique resources.

- Logical storage unit

- Memory

- CPU

Teradata’s shared-nothing architecture assigns all resources exclusively to each AMP. Expanding the system with additional hardware enables linear growth in performance.

The Teradata Primary Index – Hash Partitioning

Hash partitioning achieves parallelism by evenly distributing data among AMPS, ensuring each AMP performs equally.

Hash partitioning efficiently distributes large data sets to AMPs, but imbalanced task distribution can create bottlenecks on individual AMPs, resulting in skewing and increased pressure.

Addressing skew is a primary concern for a performance tuner working on a Teradata system.

To implement hash partitioning, designate one or more columns in each table to calculate a hash value, determining the corresponding AMP for a given row. These columns are referred to as the Primary Index.

CREATE TABLE Customer(Customer_ID BIGINT NOT NULL,Lastname VARCHAR(500),Firstname VARCHAR(500)) UNIQUE PRIMARY INDEX (Customer_Id);Teradata Pipelining Steps

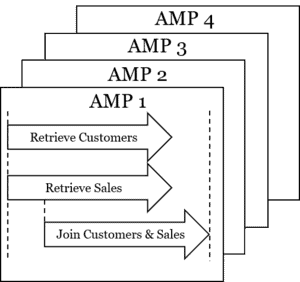

Pipelining further enhances query parallelism by allowing steps to begin before their predecessors are complete.

A request consists of multiple subtasks, as previously mentioned. These subtasks can include, for instance:

- Read all rows of a table (simple step)

- Update a subset of table rows (simple step)

- Read two tables, redistribute them, and join them (complex step)

Steps can have different complexity, and AMPs may also need to interact with each other.

Through pipelining, the join process can commence while the involved tables’ rows are still being redistributed, despite its complexity.

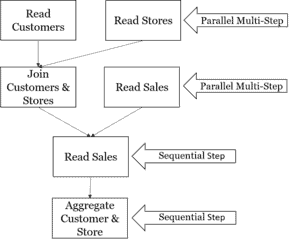

Teradata Parallel Multi-Steps

Pipelining involves nested steps where the predecessor’s input is readily accessible to the successor. Additionally, Teradata offers an extra layer of parallelism.

Independent steps can be executed simultaneously.

The Teradata BYNET

Teradata operates on a shared-nothing architecture where work is executed in parallel by the AMPs.

To facilitate communication among AMPs, the BYNET network serves as the medium for exchanging messages and data.

The Tasks of the BYNET

BYNET is not just an ordinary network; it possesses unique features tailored specifically for Teradata.

- Message Delivery: Guarantees that messages arrive at the target AMP

- Coordinate multiple AMPs working on the same step

- Sorting of the final result set when sending to the client

- Minimizes the number of AMPs needed for a step

- Congestion control to avoid an overloaded network

Message Passing & the BYNET

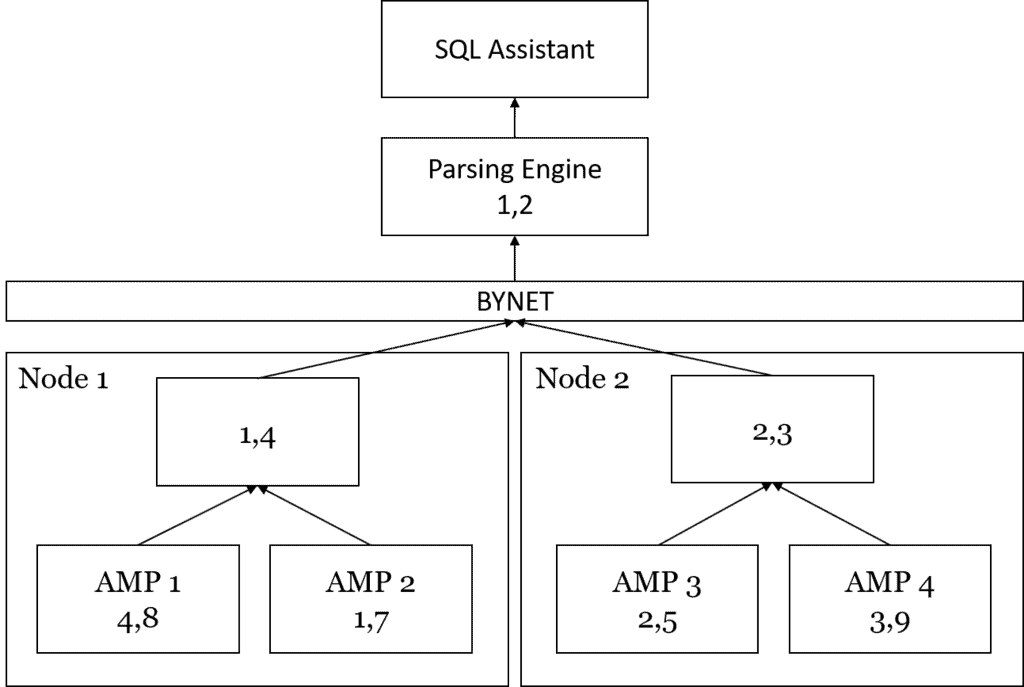

To comprehend the functions of BYNET, it is imperative to introduce a novel virtual process known as the Parsing Engine, which is accountable for generating the execution plan of a request. BYNET serves as the intermediary between the Parsing Engine and the AMPs.

Messages can be sent from the Parsing Engine via BYNET to the AMPs, but BYNET is also responsible for the AMP-to-AMP communication.

BYNET can transmit messages to all or a selected group of AMPs.

Sorting the Final Answer Set

What sets Teradata apart from others?

The ResultSet is parallelly sorted, with pre-sorting at each level (AMP, Node, BYNET, Parsing Engine) to prevent costly sorting at the end.

- Each AMP locally sorts its data (this is done in parallel)

- Each Node takes one buffer of data from all its AMPs and sorts it (buffer by buffer by AMP)

- The BYNET passes one buffer per Node to the Parsing Engine, which does the final sort.

The Teradata Shared Nothing architecture comprises these vital elements. Further information regarding data storage is available here: