There are many arguments in favor of in-memory databases. If memory were unlimited, keeping all data in memory would undoubtedly provide the fastest possible access.

Access times differ significantly between main memory and hard disks or solid-state drives (SSDs). This gap has always existed, and it will persist.

Although in-memory databases such as SAP HANA offer the fastest data access, Teradata chose to follow the 80-20 rule, which states that roughly 80% of accesses touch only 20% of the data. From a cost perspective, it is sufficient to keep this frequently accessed data in memory and to store the less frequently accessed data on slower storage. In practice, the actual distribution may differ somewhat from the 80-20 ratio.
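The 80-20 idea can be checked with simple arithmetic: rank the data blocks by access count and measure what share of all accesses the hottest 20% receive. The following Python sketch uses made-up access counts, and the function name `share_of_accesses` is hypothetical; it is not part of any Teradata tool.

```python
def share_of_accesses(access_counts, hot_fraction=0.2):
    """Return the share of all accesses that hit the hottest `hot_fraction` of blocks."""
    ranked = sorted(access_counts, reverse=True)
    # Treat at least one block as hot, even for very small inputs.
    n_hot = max(1, round(len(ranked) * hot_fraction))
    total = sum(ranked)
    return sum(ranked[:n_hot]) / total if total else 0.0


# Made-up access counts for ten data blocks.
counts = [400, 400, 30, 30, 30, 30, 20, 20, 20, 20]
print(f"{share_of_accesses(counts):.0%} of accesses hit 20% of the blocks")
# prints "80% of accesses hit 20% of the blocks"
```

With real access statistics the result will rarely be exactly 80%, which is why the split is a rule of thumb rather than a fixed ratio.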

Teradata categorizes data by its access frequency into cold, warm, hot, and very hot. This classification system is known as Teradata Intelligent Memory.
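To make the four temperature classes concrete, here is a minimal Python sketch that labels blocks by the rank of their access counts. The band limits (top 5% very hot, next 15% hot, next 30% warm, rest cold) and the function name `classify_temperatures` are assumptions for illustration only; Teradata's actual thresholds are not given here.

```python
def classify_temperatures(access_counts):
    """Label each block as very hot, hot, warm, or cold by access-count rank.

    Hypothetical bands: top 5% very hot, next 15% hot, next 30% warm, rest cold.
    """
    bands = [(0.05, "very hot"), (0.20, "hot"), (0.50, "warm"), (1.00, "cold")]
    # Hottest blocks first.
    order = sorted(access_counts, key=access_counts.get, reverse=True)
    n = len(order)
    labels = {}
    for rank, block in enumerate(order):
        position = (rank + 1) / n  # cumulative share of blocks up to this one
        for limit, label in bands:
            if position <= limit:
                labels[block] = label
                break
    return labels


# Made-up access counts for 20 blocks: block0 is hottest, block19 coldest.
blocks = {f"block{i}": 100 - i for i in range(20)}
labels = classify_temperatures(blocks)
print(labels["block0"], labels["block19"])  # very hot cold
```

Ranking by relative position rather than by absolute counts keeps the classes meaningful even as overall system load changes.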

Teradata Intelligent Memory is now available on all systems running Teradata 14.10.

To understand “data temperature” and how it relates to storage type, we first need to look at how a Teradata system stores data.

A Teradata AMP can only operate on rows after their data blocks have been loaded into memory; for this purpose, each AMP has its own FSG cache. Starting with Teradata 14.10, AMPs also have additional memory reserved for frequently accessed data. Data blocks moved into this memory may remain there for several days.
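The idea of reserving memory for frequently accessed blocks can be illustrated with a small frequency-based cache. This Python sketch is an assumption for illustration: the class name `FrequencyCache` and its least-frequently-used replacement rule are hypothetical and do not describe Teradata's actual policy for Intelligent Memory.

```python
from collections import Counter


class FrequencyCache:
    """Hold at most `capacity` blocks, preferring the most frequently accessed ones.

    Hypothetical illustration only; not Teradata's actual replacement policy.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.hits = Counter()  # access count per block id (kept for all blocks)
        self.cached = set()    # block ids currently held in memory

    def access(self, block_id):
        """Record an access; return True if the block was already in memory."""
        self.hits[block_id] += 1
        if block_id in self.cached:
            return True
        if len(self.cached) < self.capacity:
            self.cached.add(block_id)
        else:
            # Replace the coldest cached block only if the new block is hotter.
            coldest = min(self.cached, key=lambda b: self.hits[b])
            if self.hits[block_id] > self.hits[coldest]:
                self.cached.remove(coldest)
                self.cached.add(block_id)
        return False


cache = FrequencyCache(capacity=2)
for block in ["A", "A", "A", "B", "B", "C", "C", "C"]:
    cache.access(block)
print(sorted(cache.cached))  # ['A', 'C']
```

Because access counts persist, a block that stays popular keeps its place for a long time, which matches the idea of hot blocks being retained for days rather than evicted after a single scan.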

If solid-state drives are available, they are the best choice for the next storage level, because they are considerably faster than traditional hard disks.

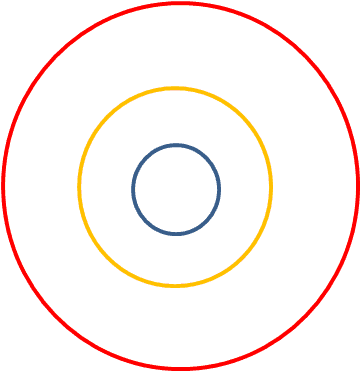

The diagram shows the layout of a hard disk, with each circle representing one disk cylinder.

Data blocks are stored in cylinders, and a full cylinder can be read into memory in a single revolution. Because the outer (red) cylinder holds more data blocks than the inner (blue) cylinder, one revolution reads more blocks into memory from the outer cylinder.
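Why outer cylinders hold more blocks follows from geometry: at a roughly constant recording density, a track's capacity grows with its circumference, and since the disk spins at a constant speed, every revolution takes the same time. The following Python sketch uses made-up radii and density, treats a cylinder as a single track, and the function name `blocks_per_revolution` is hypothetical.

```python
import math


def blocks_per_revolution(radius_mm, blocks_per_mm):
    """Blocks passing under the head in one revolution of a track at `radius_mm`.

    Assumes a constant linear recording density (`blocks_per_mm`), so a track's
    capacity grows with its circumference, 2 * pi * r.
    """
    return math.floor(2 * math.pi * radius_mm * blocks_per_mm)


# Made-up geometry: inner track at 20 mm, outer track at 45 mm from the spindle.
inner = blocks_per_revolution(radius_mm=20, blocks_per_mm=1.0)
outer = blocks_per_revolution(radius_mm=45, blocks_per_mm=1.0)
print(inner, outer)  # 125 282
```

Under these assumed numbers, one revolution over the outer track delivers roughly 2.3 times as many blocks as one over the inner track, in the same amount of time.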

Teradata optimizes data storage by placing cold data in the inner cylinders and hotter data in the outer cylinders.

Teradata’s storage strategy therefore follows a hierarchy of memory, SSD, and hard disks, where the disk level is further ordered by cylinder position, with outer cylinders ahead of inner ones.
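The hierarchy can be sketched as a lookup from temperature to a preferred tier, falling back to the next slower tier when the preferred one is missing (for example, on a system without SSDs). The tier names, the temperature-to-tier mapping, and the function `place` are simplified assumptions based on the description above, not Teradata's actual placement logic.

```python
# Simplified tier order, fastest first (assumption based on the hierarchy above).
TIERS = ["memory", "SSD", "outer disk cylinders", "inner disk cylinders"]

# Hypothetical mapping of data temperature to its preferred tier.
PREFERRED_TIER = {
    "very hot": "memory",
    "hot": "SSD",
    "warm": "outer disk cylinders",
    "cold": "inner disk cylinders",
}


def place(temperature, available):
    """Return the fastest available tier at or below the preferred one."""
    start = TIERS.index(PREFERRED_TIER[temperature])
    for tier in TIERS[start:]:
        if tier in available:
            return tier
    raise ValueError("no storage tier available")


# A system without SSDs: hot data falls back to the outer cylinders.
no_ssd = {"memory", "outer disk cylinders", "inner disk cylinders"}
print(place("hot", no_ssd))  # outer disk cylinders
```

Falling back only toward slower tiers keeps memory reserved for the very hot data it is meant for.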

Teradata’s solution offers a reasonable compromise between performance and the capacity limits inherent in in-memory databases, although it is not quite as fast as a true in-memory solution.

I have some basic questions.

Any help would be much appreciated.

Question 1

Memory used by an AMP: vdisks, RAM, spool space, and FSG cache. Please confirm.

Question 2

Is FSG cache memory the same as spool space, where data is moved when joins take place?

Question 3

Each AMP has its own RAM, so RAM is not the same as FSG cache memory. Is an AMP's memory fixed, or is it also allocated by the PDE, as is done for the FSG cache?

Question 4

Initially, data is distributed across the AMPs, so where is this data stored: on vdisks or on pdisks? Is a vdisk a combination of pdisks?

Question 5

If possible, could someone provide an image showing what is inside an AMP?

That’s more like catching up with the multi-temperature data management that DB2 10.1 already introduced in 2012.

DB2 with BLU now goes a step further: it offers true in-memory capability, as SAP HANA does. In-memory is more than just storing records in RAM. It is also about applying intelligent techniques to reduce the volume of data that has to be kept in memory and pushed through the execution pipelines of the CPUs. That’s why data skipping, columnar storage, order-preserving compression algorithms such as Huffman encoding (IBM calls this “actionable compression”), and SIMD processing play a critical role in true in-memory databases. Unfortunately, Teradata is not there yet.