Teradata Load Times vs. Snowflake Load Times

Elasticity is a crucial aspect of contemporary cloud databases like Snowflake that sets them apart from on-premises shared-nothing databases like Teradata.

Lower cost (“pay only for what you use”) is usually named as one of the main advantages. I think this view is very one-sided. Snowflake must first show how it compares with Teradata when many queries run in parallel, and to keep up, Snowflake has to be scaled up accordingly.

Picking a winner is difficult because it depends heavily on the workload, so no sweeping general statement can be made.

This blog post shows how, under the current pricing model, Snowflake can accelerate ETL processes without increasing costs. It does not attempt a full analysis of total cost of ownership.

I will also show why accelerating the ETL process in this way is difficult with Teradata. Note, however, that I am not claiming that Snowflake has a lower total cost of ownership.

Elasticity opens up options for the ETL process that are not available on on-premises systems such as Teradata or Netezza, nor with Teradata in the cloud.

The ETL Process

Consider a typical ETL process that involves reading data from a source, transforming it, potentially historizing it, and ultimately writing it to the target database.

Some ETL jobs (scripts) depend on others, while jobs without dependencies can run concurrently.

Today, the typical arrangement is to run multiple ETL scripts concurrently in a batch, either on a single ETL server or on a cluster of servers.
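Which jobs can share a batch follows directly from the dependencies. The Python sketch below groups jobs into batches so that each job runs only after everything it depends on has finished, while the jobs within one batch can run concurrently. The job names and the dependency graph are hypothetical, and the standard-library `graphlib` module (Python 3.9+) merely stands in for whatever scheduler a real ETL tool uses.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL jobs: each key depends on the jobs in its set.
jobs = {
    "load_customers": set(),
    "load_products": set(),
    "load_orders": {"load_customers", "load_products"},
    "historize_orders": {"load_orders"},
    "load_web_logs": set(),
}


def concurrent_batches(graph):
    """Return a list of batches; jobs in the same batch do not depend
    on each other and can therefore run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose dependencies are all done
        batches.append(ready)
        sorter.done(*ready)  # mark the whole batch as finished
    return batches


for i, batch in enumerate(concurrent_batches(jobs), start=1):
    print(f"batch {i}: {batch}")
```

With this graph the three independent loads form the first batch, followed by `load_orders` and then `historize_orders`.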

Sizing The Compute Node Cluster in Snowflake

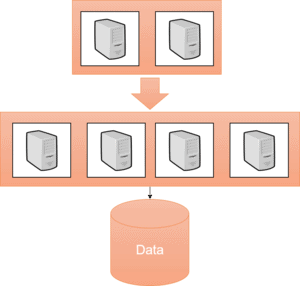

Can the load be sped up without increasing costs? One option is to double the number of compute nodes in the Snowflake cluster, which in Snowflake terms means moving the virtual warehouse up one size.

Assuming billing by running time (per minute), twice as many nodes cost twice as much per minute. If doubling the nodes cuts the loading time in half, which Snowflake achieves, the total cost stays the same: the load finishes in half the time at no extra cost.
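A minimal Python sketch of this arithmetic, under stated assumptions: credits per hour double with each warehouse size (Snowflake’s published rates for standard warehouses start at 1 credit per hour for X-Small), billing is per second with a 60-second minimum, and the load scales perfectly linearly with compute. The 120-minute baseline on a Medium warehouse is a made-up figure, not a measurement, and the helper names are hypothetical.

```python
import math

# Assumed credits per hour for standard warehouses; each size doubles the previous one.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def load_cost(size, runtime_seconds, minimum_seconds=60):
    """Credits consumed by one warehouse running for runtime_seconds,
    billed per second with an (assumed) 60-second minimum charge."""
    billed = max(math.ceil(runtime_seconds), minimum_seconds)
    return CREDITS_PER_HOUR[size] * billed / 3600


def scaled_runtime(base_runtime_seconds, base_size, new_size):
    """Runtime on new_size under the idealized assumption of linear scaling."""
    factor = CREDITS_PER_HOUR[new_size] / CREDITS_PER_HOUR[base_size]
    return base_runtime_seconds / factor


base = 120 * 60  # hypothetical 120-minute load on a Medium warehouse
fast = scaled_runtime(base, "M", "L")
print(f"Large runtime: {fast / 60} min")
print(f"Medium cost: {load_cost('M', base)} credits, Large cost: {load_cost('L', fast)} credits")
```

Under these assumptions the Large warehouse finishes in 60 minutes instead of 120 and consumes the same 8 credits. If the load scales less than linearly, the larger warehouse runs for more than half the time and the cost rises accordingly.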

Whether this works depends on how cloud resource usage is billed.

Even where there is no cost advantage, Snowflake gives us flexible control over the loading process.

Ideally, the loading time can be decreased at no extra expense.

For example, when many jobs run during a month-end load, additional compute nodes may be needed to meet service-level agreements. Teradata lacks this capability, as explained later.

Adding Additional Compute Node Clusters in Snowflake

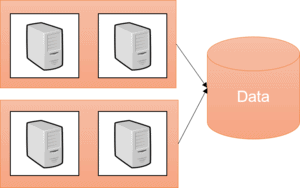

Another option is to run the scripts on several independent clusters.

Compared with the previous setup, in which nodes were added to a single cluster, this reduces contention and can shorten runtimes further.

The jobs on each cluster now have that cluster’s resources to themselves.

This matters because jobs on the same cluster compete for CPU, memory, and I/O, and managing that contention requires significant effort.
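Distributing independent scripts over several clusters can be sketched with a simple greedy heuristic (longest processing time first): place the longest remaining script on the cluster with the least work so far. This is an illustration, not how Snowflake schedules anything; it assumes each script’s runtime on a dedicated cluster is known in advance, that scripts on different clusters do not interfere, and that each cluster runs its scripts back to back. The script names and runtimes are hypothetical.

```python
import heapq


def assign_jobs(runtimes, n_clusters):
    """Greedy longest-processing-time-first assignment of jobs to
    independent clusters. Returns (per-cluster job lists, makespan)."""
    # Min-heap of (accumulated minutes, cluster index).
    heap = [(0, i) for i in range(n_clusters)]
    heapq.heapify(heap)
    assignment = [[] for _ in range(n_clusters)]
    # Longest jobs first, each on the currently least-loaded cluster.
    for job, minutes in sorted(runtimes.items(), key=lambda kv: (-kv[1], kv[0])):
        load, idx = heapq.heappop(heap)
        assignment[idx].append(job)
        heapq.heappush(heap, (load + minutes, idx))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


# Hypothetical runtimes (minutes) of independent ETL scripts on a dedicated cluster.
runtimes = {"sales": 40, "inventory": 30, "customers": 20, "web_logs": 25, "returns": 10}
clusters, makespan = assign_jobs(runtimes, n_clusters=2)
print(clusters, makespan)
```

Here two clusters finish the batch after 65 minutes instead of the 125 minutes a single cluster would need running the scripts one after another. Because billing follows running time, the two clusters together are billed for the same 125 cluster-minutes, provided they are the same size and suspend as soon as they are idle.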

Why Teradata Is Not Suitable

Starting additional compute nodes costs money, but because Snowflake can do this within minutes, it adapts exceptionally well to fluctuating workloads.

Although Teradata is inherently scalable, its flexibility is limited even in the cloud: in its shared-nothing architecture each node owns a portion of the data, so adding or removing nodes means redistributing data, which prevents scaling in real time.

As stated earlier, this does not address total cost of ownership, and I do not believe costs can be defined universally.

Snowflake’s marketing is misleading when it claims a significantly lower total cost of ownership (TCO) than Teradata.

Teradata, for its part, claims that Snowflake needs many additional compute nodes to perform well when many competing requests run in parallel.

Both marketing claims are flawed, because the final outcome depends on the workload.

For further information, visit the official Snowflake website.