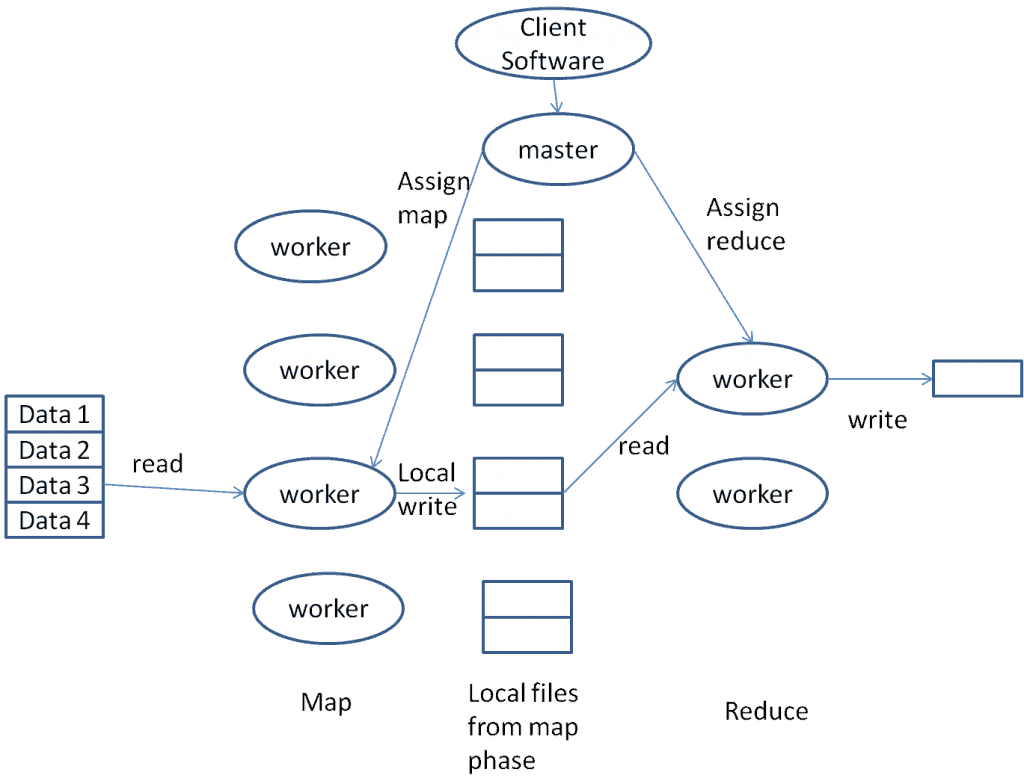

The illustration above depicts the design of real-world MapReduce implementations, such as Hadoop.

The input files reside in a distributed file system, such as HDFS for Hadoop or GFS at Google.

Worker processes handle mapper or reducer tasks.

Mappers read data from HDFS, apply the map function, and save the output on their local disks.

Whenever a mapper process has completed processing a specific reduce key (recall that the output of the map phase consists of key/value pairs), it notifies the master process of its readiness.
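To make the mapper's role concrete, here is a minimal sketch of a map task. The names `run_map_task` and `word_count_map` are hypothetical, and an in-memory dictionary stands in for the mapper's local disk; a real implementation would read its input split from the distributed file system and write partitioned files.

```python
from collections import defaultdict


def run_map_task(records, map_fn):
    """Apply map_fn to every record and group the emitted values by reduce key.

    The returned dict stands in for the mapper's local disk (an assumption
    made for illustration only).
    """
    local_output = defaultdict(list)
    for record in records:
        # map_fn yields zero or more (key, value) pairs per input record.
        for key, value in map_fn(record):
            local_output[key].append(value)
    return dict(local_output)


def word_count_map(line):
    """Example map function: emit (word, 1) for each word in a line."""
    for word in line.split():
        yield word.lower(), 1
```

Calling `run_map_task(["a b a", "b c"], word_count_map)` groups the emitted pairs by key, giving `{"a": [1, 1], "b": [1, 1], "c": [1]}`. Each key in that result is a reduce key the mapper could report to the master.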

The master process is crucial because it assigns completed keys to the various reducer processes, as shown in the illustration.

Worker processes are not specialized: any of them can take on either a mapper or a reducer task.

When the master process notifies a reducer process of an available reduce key, it also tells the reducer which mapper (and therefore which local disk) holds the data to retrieve.

A reducer task must wait for all mapper processes to deliver data for a specific key before it can begin processing.

The master process keeps the records needed to verify that all mapper tasks have provided data for a specific key, which is a prerequisite for the reduce task to start work on that key.
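The master's bookkeeping can be sketched as follows. This is an illustration of the simplified model described here, in which every mapper reports each reduce key, not the actual Hadoop implementation; the class `ReduceKeyTracker` and its methods are hypothetical names.

```python
from collections import defaultdict


class ReduceKeyTracker:
    """Hypothetical master-side record of which mappers reported each reduce key."""

    def __init__(self, mapper_ids):
        self.mapper_ids = set(mapper_ids)
        # reduce key -> {mapper id: where that mapper stored its output for the key}
        self.locations = defaultdict(dict)

    def report(self, mapper_id, key, location):
        """Record that a mapper finished a key and where its output lives."""
        if mapper_id not in self.mapper_ids:
            raise ValueError(f"unknown mapper: {mapper_id}")
        self.locations[key][mapper_id] = location

    def is_ready(self, key):
        """A key may be reduced only after every mapper has reported it."""
        return set(self.locations.get(key, {})) == self.mapper_ids

    def fetch_plan(self, key):
        """Return the mapper locations a reducer should pull from for this key."""
        if not self.is_ready(key):
            raise RuntimeError(f"key {key!r} is still waiting on mappers")
        return dict(self.locations[key])
```

With two mappers `m1` and `m2`, a key is not ready after only `m1` reports it. Once `m2` reports as well, `fetch_plan` hands the reducer both locations.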

MapReduce provides better fault tolerance than traditional database systems, which is a significant advantage of the paradigm and its real-world implementations.

Consider a conventional database system such as Teradata.

Despite the various fault tolerance features built into a Teradata system, it is hard to imagine it scaling to hundreds of thousands of processes.

At that scale, probability makes failures inevitable over the long run.

Let’s try a straightforward example:

If each process has a 1% probability of failing, the chance that at least one process fails among hundreds of thousands is approximately 100%. Failure is therefore a certainty in systems this large, and such systems may become more common in the realm of Big Data.
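The arithmetic behind this claim can be checked with a short sketch. It assumes that processes fail independently of each other, which the text does not state explicitly; the function name is hypothetical.

```python
import math


def prob_at_least_one_failure(p, n):
    """Probability that at least one of n processes fails: 1 - (1 - p)**n.

    Assumes each process fails independently with probability p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    if p == 1.0:
        return 1.0 if n > 0 else 0.0
    # log1p/expm1 keep the result accurate when p is tiny or n is huge.
    return -math.expm1(n * math.log1p(-p))
```

With `p = 0.01`, a system of only 100 processes already has roughly a 63% chance of at least one failure, and for 100,000 processes the result rounds to 1.0 in floating point.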

MapReduce implementations prioritize fault tolerance.

The same statistical rules apply to MapReduce. Picture a MapReduce job that spawns numerous mapper and reducer processes. Such a job can run for a long time, processing large quantities of data while performing intricate computations, such as pattern recognition.

A master process regularly communicates with all mapper and reducer processes and logs the processed key ranges for each task.

If a mapper fails, the master process assigns its entire key range to another mapper, which reads the input from HDFS again and reprocesses the same range.

If a reducer fails, the same approach is used and a fresh reducer is assigned to the task. However, output keys that were already generated need not be reprocessed.
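The two recovery rules can be summarized in a small sketch. The `Task` record and the `reassign_after_failure` function are hypothetical and only model the behavior described here; they are not taken from Hadoop.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    task_id: str
    kind: str          # "map" or "reduce"
    key_range: tuple   # illustrative (first, last) bounds of the assigned range
    worker: str
    done_keys: set = field(default_factory=set)  # keys already finished


def reassign_after_failure(task, new_worker):
    """Build the replacement task for a failed one, following the rules in the text."""
    if task.kind == "map":
        # The mapper's output sat on the failed worker's local disk,
        # so the new mapper starts the whole range from scratch.
        return Task(task.task_id, "map", task.key_range, new_worker)
    if task.kind == "reduce":
        # Output keys that were already produced are carried over
        # and need not be reprocessed.
        return Task(task.task_id, "reduce", task.key_range, new_worker,
                    set(task.done_keys))
    raise ValueError(f"unknown task kind: {task.kind}")
```

A replacement mapper therefore starts with an empty `done_keys` set, while a replacement reducer inherits the completed keys of the task it takes over.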

The master process monitors all activities within the MapReduce task and gracefully manages failures, even in systems with many processes.

Moreover, even without actual failures, the master process identifies slow mappers or reducers and reassigns the key range they handle to a fresh process.

The master process is a single point of failure: if it fails, every task in the MapReduce job fails with it.
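One possible way to flag slow tasks is sketched below. The rule used here, comparing each task's progress with a fraction of the median progress, is an assumption chosen for illustration; the text does not say how real implementations decide that a task is slow.

```python
from statistics import median


def find_stragglers(progress, slow_factor=0.5):
    """Return the ids of tasks whose progress is far behind the median.

    progress maps task id -> fraction complete (0.0 to 1.0), with all tasks
    measured after the same elapsed time. slow_factor is a hypothetical
    tuning knob: a task is flagged when its progress is below
    slow_factor * median progress.
    """
    if not progress:
        return []
    cutoff = median(progress.values()) * slow_factor
    return sorted(task for task, done in progress.items() if done < cutoff)
```

For example, `find_stragglers({"m1": 0.9, "m2": 0.8, "m3": 0.2})` flags only `m3`, whose key range the master could then hand to a fresh process.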

Statistical probabilities must be considered.

Although the probability that at least one of the numerous processes fails is nearly 100%, the likelihood that the one specific master process fails is small. Consequently, MapReduce implementations are fault-tolerant and better suited for large-scale tasks than conventional database implementations.