Introduction

In modern business, prompt response to opportunities and issues is crucial.

The goal is to detect and collect business events so that they can be processed promptly, producing either an alert for a front-line user or an update message for an operational system.

Teradata triggers, which run inside the parallel database, can capture such events as they happen.

For example, a dashboard function can check the queue table for significant events every X minutes, filter out the unimportant ones, and surface the single serious shipper delay that could affect profitability. Teradata triggers, stored procedures, and queue tables make this possible, and they are straightforward to program and to connect to production applications.
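As a rough sketch of how a trigger could feed such a queue, the statement below pushes a row into a queue table whenever a badly delayed shipment is recorded. The table names `shipments` and `shipper_delay_q`, the columns, and the 24-hour threshold are all hypothetical, and the exact trigger syntax may differ between Teradata releases.

```sql
-- Hypothetical names: shipments (source table), shipper_delay_q (queue table).
-- The queue table's QITS column is omitted from the column list,
-- so it takes its CURRENT_TIMESTAMP default.
CREATE TRIGGER shipper_delay_trg
AFTER INSERT ON shipments
REFERENCING NEW AS new_row
FOR EACH ROW
WHEN (new_row.delay_hours > 24)   -- assumed threshold for a "serious" delay
INSERT INTO shipper_delay_q (shipper_id, delay_hours)
VALUES (new_row.shipper_id, new_row.delay_hours);
```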

How does a queue table work?

A queue table resembles an ordinary base table, but it has one distinguishing property: it behaves as an asynchronous FIFO (first-in, first-out) queue.

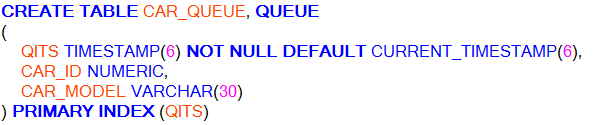

When you create a queue table, its first column must be the QITS (Queue Insertion TimeStamp) column: a TIMESTAMP column that defaults to CURRENT_TIMESTAMP.

The QITS value records when each row was inserted, unless the user supplies a different value explicitly.
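A minimal sketch of such a definition follows. The table name `shipper_delay_q` and the non-QITS columns are hypothetical and chosen only for illustration.

```sql
-- The QUEUE option turns the table into a queue table.
-- The first column is the QITS column.
CREATE SET TABLE shipper_delay_q, QUEUE (
    qits        TIMESTAMP(6) NOT NULL DEFAULT CURRENT_TIMESTAMP(6),
    shipper_id  INTEGER NOT NULL,
    delay_hours INTEGER NOT NULL
)
PRIMARY INDEX (shipper_id);
```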


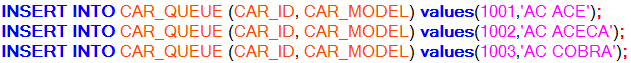

To add rows to a queue table, use an INSERT statement, which acts as a FIFO push.
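For illustration, assuming a hypothetical queue table `shipper_delay_q` with the columns `qits`, `shipper_id`, and `delay_hours`, a push could look like this:

```sql
-- QITS is left out of the column list, so each row is stamped
-- with the current timestamp at insertion time.
INSERT INTO shipper_delay_q (shipper_id, delay_hours) VALUES (101, 36);
INSERT INTO shipper_delay_q (shipper_id, delay_hours) VALUES (102, 48);
```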


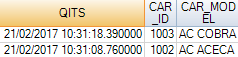

An ordinary SELECT statement works like a peek on a FIFO queue: it returns rows without removing them.
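A peek might be written as follows, again assuming the hypothetical queue table `shipper_delay_q` with a QITS column named `qits`:

```sql
-- Browse the queue in insertion order; no rows are removed.
SELECT *
FROM shipper_delay_q
ORDER BY qits;
```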


You can then use a SELECT AND CONSUME statement, which operates as a FIFO pop:

[su_panel]Data is returned from the row with the oldest timestamp in the specified queue table. The row is then deleted from the queue table, which guarantees that it is processed only once.
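A pop of the oldest row could look like the sketch below; `shipper_delay_q` is a hypothetical queue table name.

```sql
-- Return the row with the oldest QITS value and delete it from the queue.
SELECT AND CONSUME TOP 1 *
FROM shipper_delay_q;
```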


Peeking at the queue again after the pop confirms the result: the first record has been consumed.

[su_panel]

If no rows are available, the transaction enters a delay state until one of the following occurs:

• A row is inserted into the queue table

• The transaction aborts, either as a result of direct user intervention, such as the ABORT statement, or indirect user intervention, such as a DROP TABLE statement on the queue table.[/su_panel]

Queue Tables and Performance

Queue tables need care in a few areas that affect performance.

1. Each time the system performs a DELETE, MERGE, or UPDATE operation on a queue table, the FIFO cache for that table is spoiled. The next INSERT or SELECT AND CONSUME request on the table triggers a full-table scan to rebuild the FIFO cache, which hurts performance. Use DELETE, MERGE, and UPDATE on queue tables sparingly, and never as routine, frequently executed operations.

2. The FIFO cache on each PE can hold only a limited number of queue tables. When it needs to free space, the system takes one of the following actions:

– Swap out a queue table that has been spoiled. For example, a queue table that has had a DELETE operation performed on it is a candidate for purging from the FIFO cache.

– Purge an inactive queue table from the FIFO cache.[/su_panel]

[su_panel]3. To distribute your queue tables evenly across the PEs, consider creating them all at the same time.[/su_panel]

Limitations on Queue Tables

A queue table, whether it is being created or altered, may not:

[su_panel]contain a PPI. See article: The Teradata Partitioned Primary Index (PPI) Guide[/su_panel]

[su_panel]have Permanent Journals

Remember that the purpose of a permanent journal is to maintain a sequential history of all changes made to the rows of one or more tables, and to protect user data when users commit, un-commit, or abort transactions.

See article: Teradata Permanent Journal[/su_panel]

[su_panel]contain any Large Object (LOB) data[/su_panel]

[su_panel]contain References or Foreign Keys[/su_panel]

Hi Jiri, you’re welcome, thank you for the question!

Teradata uses the QITS column to maintain the FIFO ordering of rows in the queue table.

Each row entry in the queue table FIFO cache is in fact a pair of QITS and rowID values for a row still to be consumed from the queue, and the entries are sorted in QITS order. So yes: when you execute a query such as “SELECT AND CONSUME TOP 1 * FROM queue_table”, the system can retrieve the row at the top of the FIFO queue from a single AMP.

Hi Stelvio, thank you very much for your reply! I’ve got a follow-up question, though. In what way does the FIFO cache affect the query performance of queue tables? Does it contain, for example, statistics-like information on the QITS column so that Teradata can perform a single-AMP data retrieval?

Thank you very much indeed Jiri.

Basically, the purpose of the queue FIFO cache is to improve the performance of consuming rows from queue tables. The FIFO caches are created by the system during startup and hold row information for a number of non-consumed rows for each queue table. The Dispatcher is responsible for maintaining this cache.

Hi Stelvio, great article! Just a question regarding the FIFO cache. What is its purpose and what does it contain? Thanks! 🙂