What is Query Logging with Teradata DBQL Tables?

Query Logging is a feature that allows us to analyze workload and resource usage and perform performance tuning.

How Is Query Logging Implemented?

The Query Logging Framework comprises a set of DBC tables known as the Teradata DBQL tables, storing performance indicators for groups of requests and request steps. By configuring the settings, we can select which key figures to store and their level of detail.

Does the information in the DBQL tables represent 100% of the workload of a Teradata system?

No, because we can define which information should be stored and in which detail, as mentioned above. We can use filters for logging only the required information. Detailed information can be aggregated and is then only available in aggregated form.

For performance reasons, the collected information is not real-time in the DQBL tables but is cached in memory and written at intervals (e.g., every 10 minutes). If the system restarts, all information not yet written from the cache to the DBQL tables is lost.

The DBSCOntrol option “DBQLFlushRate” controls in which intervals the cache is written to the DBQL tables.

If we want to write the cache immediately, we can do it with the following command:

FLUSH QUERY LOGGING WITH ALL;

How is it determined how much information about which objects should be collected?

The following commands control query logging:

BEGIN QUERY LOGGING / END QUERY LOGGING

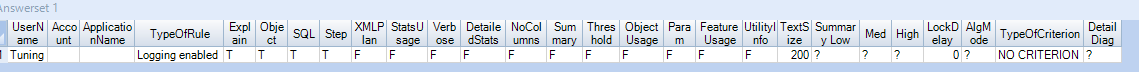

Teradata stores the precise logging rules in table DBC.DBQLRulesV.

How can I determine which rules are activated?

One way is by using this command:

SHOW QUERY LOGGING ON ALL;

Rule UserName “ALL” (From an ALL rule)

Rule UserId 00000000

Rule ZoneId FFFFFFFF

Account (Rule for any Account)

WITH NONE (No DBQL Logging)

Another possibility is to query the table with the rules:

SELECT * FROM DBC.DBQLRulesV;

Which users can enable or disable DBQL?

Only those who have EXECUTE permissions on the following macro:

GRANT EXECUTE ON DBC.DBQLAccessMacro TO DWHPRO;

Which DBQL tables should we permanently activate?

From experience, we recommend constantly activating DBQL for all database objects. At least for the table, DBQLOGTBL, DBQLOBJLOG, and DBQLSQLTBL should be logged entirely and without any filter:

BEGIN QUERY LOGGING WITH SQL, OBJECTS LIMIT SQLTEXT=0 ON ALL;

Teradata logs information for the above tables in a very efficient (caching) way, and the impact on system performance is negligible.

We should turn on logging on-demand for users, accounts, and applications. Below are two examples:

BEGIN QUERY LOGGING LIMIT THRESHOLD=100 CPUTIME AND SQLTEXT=5000 ALL ACCOUNT = ‘Account’;

BEGIN QUERY LOGGING LIMIT THRESHOLD=100 CPUTIME AND SQLTEXT=5000 ALL ON APPLNAME = ‘AppName’;

How should Query Logging for Tactical Queries be done?

Summary logging in DBQL is typically sufficient for optimized tactical queries and avoids the performance impact standard logging can have on a system with massive tactical queries. Still, standard logging can be beneficial in the optimization phase to check if our tactical queries are cached as expected (column “CacheFlag” in table DBC.DBQLOGTBL).

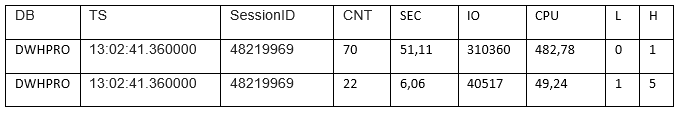

We want to recognize whether the runtimes are stable and in the single-digit second range for tactical queries. Summary logging is ideal for analysis. Here is an example where we divide the runtimes into three groups:

BEGIN QUERY LOGGING LIMIT SUMMARY = 1,5,10 ON DWHPRO;

With the below query, we can then analyze our tactical workload:

SELECT

t02.DATABASENAME AS DB,

t01.CollectTimeStamp AS TS,

t01.SESSIONID,

t01.QueryCount AS CNT,

t01.QuerySeconds AS SEC,

t01.TotalIOCount AS IO,

t01.AMPCPUTime AS CPU,

t01.LowHist AS L,

t01.HighHist AS H

FROM DBC.DBQLSUMMARYTBL t01

INNER JOIN

DBC.DATABASES2 t02

ON

t01.UserId = t02.DatabaseId

WHERE DATABASENAME = ‘DWHPRO’

ORDER BY T02.DATABASENAME,t01.CollectTimeStamp, t01.LowHist;

LowHist and HighHist define the seconds over which Teradata summed up a session’s query runtimes. Only records for a specific range are written if at least one query falls into it. QueryCount counts the number of queries per range; QuerySeconds is the summed-up execution time of all queries in a runtime range. Here is an example result set of the query shown above:

The Teradata DBQL Tables

The DBC database contains several table/view combinations for query logging.

- DBC.DBQLOGTBL and DBC.QryLogV

The table DBC.DBQLOGTBL stores one row per query with aggregated key figures like the number of CPU seconds, the number of IOs, and much more. - DBC.DBQLStepTbl and DBC.QryLogStepsV

The individual query steps are saved here, including the important key figures for each query system. - DBC.DBQLObjTbl and DBC.QryLogObjectsV

One row per database object used is stored here. - DBC.DBQLSqlTbl and DBC.QryLogSQLV

All SQL text is stored here in the common record for table DBC.DBQLOGTBL. One SQL text may require several rows per query. - DBC.DBQLSummaryTbl and DBC.QryLogSummaryV

Certain filters cause the information about requests not to be stored individually in DBC.DBQLOGBTBL, but only aggregated with other queries in this table. - DBC.DBQLExplainTbl and DBC.QryLogExplainV

Saves the individual steps of the Execution Plan - DBC.DBQLXMLTbl and DBC.QryLogXMLV

Saves the Execution Plan of the queries as XML - DBC.QryLogEventsV

Stores workload management events - DBC.QryLogExceptionsV

Stores workload management exceptions

Teradata DBQL Logging Levels

Teradata query logging can be enabled for all or selected users.

BEGIN QUERY LOGGING ON ALL;

BEGIN QUERY LOGGING ON DWHPRO;We can restrict query logging to an account or a particular account and user combination.

BEGIN QUERY LOGGING ON DWHPRO ACCOUNT = ‘TheAccount’;

Logging can be constrained to particular Teradata applications.

BEGIN QUERY LOGGING ON APPLNAME = ‘Application’;

The WITH Option

The “WITH” option specifies the specific account and user details we wish to record in the application log.

BEGIN QUERY LOGGING ON DWHPRO WITH

- NONE

Turn off query logging for the specific user, account, or application - ALL

Log DBC.DBQLOGTBL plus EXPLAIN, OBJECTS, SQL, STEPINFO - EXPLAIN

Log DBC.DBQLOGTBL plus the EXPLAIN text - OBJECTS

Log DBC.DBQLOGTBL plus the objects accessed - SQL

Log DBC.DBQLOGTBL plus the entire SQL text - STEPINFO

Log DBC.DBQLOGTBL plus all steps of the query - XMLPLAN

Log DBC.DBQLOGTBL plus the Explain Plan as XML - STATSUSAGE

Log DBC.DBQLOGTBL plus statistics usage

The LIMIT Option

The LIMIT parameter dictates the amount of data that is retained.

- SQLTEXT

Determines the length of the SQL text which is stored. The default is 200 characters. It can be between 0 and 10,000 characters. - SUMMARY

It does not log into DBC.DBQLOGTBL and only writes counts of queries each 10 minutes - THRESHOLD

Teradata generates rows in DBC.DBQLOGTBL depends on certain thresholds (CPU seconds, etc.) defined. If the measure is below the threshold, Teradata only writes a summary row into DBC.DBQLSummaryTbl

Difference Between SUMMARY And THRESHOLD

Both logging types can utilize extra filters such as seconds, milliseconds, CPU and normalized CPU milliseconds, and the number of IOs.

With SUMMARY, these filters affect the queries in a group. Teradata saves the information only in table DBC.DBQLSummaryTbl.

SUMMARY = n1, n2, n3 [ELAPSEDSEC, ELAPSEDTIME, CPUTIME, CPUTIMENORM or IOCOUNT]

THRESHOLD filters direct queries to either DBC.DBQLOGTBL or DBC.DBQLSummaryTbl.

THRESHOLD = n [ELAPSEDSEC, ELAPSEDTIME, CPUTIME, CPUTIMENORM or IOCOUNT]

Query Logging Examples

BEGIN QUERY LOGGING ON ALL; Logs default row for all users, accounts, and applications.BEGIN QUERY LOGGING WITH NONE ON DWHPRO; Logs default row for all users but excludes DWHPRO in a second step.BEGIN QUERY LOGGING WITH NONE ON APPLNAME = ‘FASTLOAD’; Logs all requests of all fastloads.BEGIN QUERY LOGGING WITH NONE LIMIT THRESHOLD = 500 CPUTIME ON ALL; Logs default row for all queries above 100 CPU milliseconds; otherwise, counts the query in the DBC.DBQLSummaryTbl.

BEGIN QUERY LOGGING LIMIT SUMMARY = 100, 1000, 2000 CPUTIME ON ALL;

Logs in DBC.DBQLSummaryTbl in 4 different groups

- The query takes up to 100 milliseconds

- The query takes up to 1000 milliseconds

- The query takes up to 2000 milliseconds

- The query takes more than 2000 milliseconds

BEGIN QUERY LOGGING LIMIT THRESHOLD = 100 IOCOUNT ON ALL;

If a query requires more than 100 IOs, a default row should be logged in DBC.DBLOGTBL. Alternatively, if a query requires a maximum of 100 I/Os, it should be counted in DBC.DBQLSummaryTbl.

The Teradata DBQL Table Key Figures

The figures below are vital for optimizing queries through DBQL:

- Query Start Time and Query End Time

- Parsing Time of the Query

- Error Codes

- Total CPU Time (in centiseconds)

- Total IO Count

- Workload Delay Times

- The Query Text (truncated)

Performance indicators can be found in the DBQLOGTBL table, which is automatically enabled for logging on every Teradata system. It is often the only means of identifying metrics.

We use the DBQLStepTbl to obtain comprehensive and detailed data for each query step.

- Query Step Timings (Start and End Time of each step)

- Row Counts per Step

- The activity of each Step (Join, Redistribution, etc.)

- CPU and IO usage for each step

Access more information on Teradata DQBL through the official documentation by clicking the link provided: Teradata Official Documentation for DQBL.

What Are The Most Useful DBQL Performance Indicators?

The Teradata Performance Indicators provide diverse viewpoints of a query and an overview of its potential for enhancement. Access these indicators via the DBC.DBQLOGTBL table.

The DBQL Product Join Indicator

This indicator can detect undesired product joins, as implied by its name.

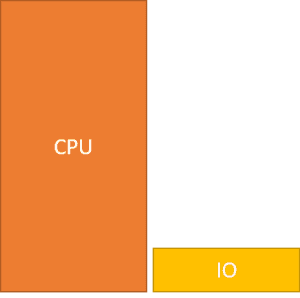

The product joins are identified by analyzing the CPU to I/O ratio. High values indicate a product join, as increased comparisons result in more IOs. You can use the following formula to calculate the indicator:

CASE WHEN TotalIOCount = 0 THEN 0 ELSE (AMPCPUTime * 1000)/TotalIOCount ENDIf the product join indicator exceeds 3, the execution plan for product joins should be examined.

A high product join indicator value can be attributed to other factors, such as:

- Aggregations of large amounts of data that pass through the memory. Since the aggregation occurs in memory, hardly any IOs are required but many CPU seconds.

- Duplicate rows check when there are a lot of hash collisions.

- Extensive string operations – often generated in the automatically generated queries of BI tools.

- Multivalue Compression (MVC) increases the product join indicator; since more rows fit into each data block when using MVC, fewer IOs are needed to copy the data from disk to memory. The previous explanation applies to systems without dedicated Block Level Compression (BLC) hardware. BLC needs a lot of CPU for decompressing the data

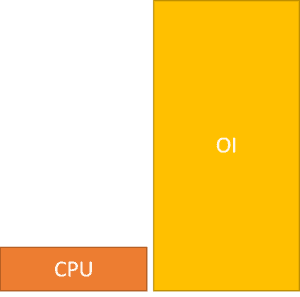

The DBQL Indicator For Wasted I/Os

This indicator is the opposite of the product join indicator in its calculation. Its purpose is to identify processes that require many I/O operations but use little CPU time. A high score indicates that Teradata is replicating large amounts of data, often due to incorrect primary index selection or missing indexes.

Below is the formula for the indicator:

CASE WHEN AMPCPUTime = 0 THEN 0 ELSE TotalIOCount/(AMPCPUTime * 1000) ENDSimilar to the indicator for joining products, it is crucial to inspect the execution plan when the value exceeds 3.

The DBQL CPU and IO Skew Indicators

The skew indicator is crucial in detecting imbalanced workloads that result in subpar performance on Teradata systems. It analyzes tables, joins, spools, and other potential causes of skewness.

To calculate CPU skew, use the following formula:

CASE WHEN (AMPCPUTime / (HASHAMP()+1) =0 THEN 0

ELSE MaxAmpCPUTime/(AMPCPUTime / (hashamp()+1)

ENDTo compute I/O skew, follow these steps:

CASE WHEN (TotalIOCount / (HASHAMP()+1) =0 THEN 0

ELSE MaxAmpIO/(TotalIOCount / (HASHAMP()+1)

END If indicator values are above 2, we should examine the execution plan for potential skew issues.

The DBQL CPU Impact Indicator

This indicator measures the effect of skew on system parallelism utilization.

We calculate the indicator using the following method:

MaxAmpCPUTime * (HASHAMP()+1)The higher the value, the worse the query.

DBQL Indicator Summary

These indicators have a significant advantage because they represent absolute values. If the execution plan remains constant, a query will consistently produce similar key figures.

DBQL Query Response Times

Runtime behavior is affected by various variables, which makes query runtimes an unsuitable performance-tuning indicator. Although it is a goal for optimization, it is not one of our primary objectives.

How do I export logs from Teradata to external system?Is there any method to do that ? if we can do it using teradata viewpoint please explain the procedure

Hi,

in the official VM I was not able to enable the logging in Teradata Studio Express, I go the error:

Executed as Single statement. Failed [9944 : HY000] DBQL Statements not allowed inside ANSI transactions and explicit Teradata (BTET) transactions.

The solution is to change the JDBC connection properties, TMODE=TERA

br

Fari